Second Pass: Low-Power GPS device. Project Pathfinder with SHARP Memory display

- terrycornall

- Jun 3, 2022

- 50 min read

Updated: Jun 18, 2025

I am a retired electronics geek with some experience writing software for small microcontrollers and I love the idea of putting that to work in the field of hiking. I guess the main target will be navigation devices using GPS and maps. (I can't think of anything else I want to lug around out in the bush that needs batteries and microcontrollers. Except camera timer or motion stages for astrophotography gear, communications, temperature logging in the snow, torches, e-readers, heaters, rechargers, solar power, drones... oh all right, maybe there might be more than navigation stuff.)

NOTE: Unlike my hiking and running and other sporty endeavors, this blog is pretty dry and technical. It is also incomplete as I make no effort to put all the gritty details into it. It's more about the concepts, thoughts and results than about exactly how to do it. Expect some ranting though...

I had a bash at the handheld GPS mapping receiver using e-ink and whilst it looked good and worked OK, the display speed was causing too many issues. So this is a second go at it using a low-power monochrome transflective display from Sharp. It's monochrome, did I mention that? There are smaller, watch-sized versions of it that have color but they are too small for me. There are supposed to be color devices made by JDI in this size but I am having a hard time finding suppliers. So I'll see what I can do with mono. It's faster and less memory intensive anyway...

A New Hope. Feathering my Nest and Sharp Memory Display.

Despite getting the e-ink Pathfinder more or less working, I am not entirely happy with the e-ink display (too small, too slow) so I got a Sharp Memory display from Adafruit. It's bigger at 2.7", has more pixels at 400 x 240 and is much faster, but unfortunately it is only monochrome. It uses a non-backlit transflective LCD which is supposedly even more current miserly than the e-ink, although it doesn't keep the image when powered off completely. I connected it to a Feather Express board (which uses an M4 processor similar to the one on the ItsyBitsy).

I tested it in Circuitpython and time to draw the map from file is about the same, but displaying the buffer is much much faster and nicer, compared to the e-ink version I implemented on the ItsyBitsy. I was very disappointed that although there is displayio support for the display in Circuitpython it won't be usable for the map. It relies on drawing objects that hang around after being displayed, just in case you want to modify them on the fly, like sprites. This is great but chews up the memory way too fast and by the time I get a few thousand line-objects down to form a map it runs out of memory. And I mostly don't need the smart objects, really. Sure, they have some nice features like a function called 'contains()' that you can query to see if a point falls inside a shape, but I don't need that most of the time. Object Oriented Programming is all very well, but it is expensive and slow. So I had to use primitives just to draw into a bitmap, almost exactly as I did for the e-ink display. Worse, it looks like I'd have to re-invent things like filled_triangle() and thickAngledLine() functions. Aaaargh. (Note that I found a way to use displayio after all, with a bitmap for the map and labels for text and crosshair. Still had to write my own code for a thick line though, but that was made easier using a Bresenham generator. Read on.)

Now I was hoping that I could get the best of both worlds by using a compiled C module to draw the map into a bitmap and pure Circuitpython for all the interactive and database stuff. See Overview | Extending CircuitPython: An Introduction | Adafruit Learning System. However, looking at the 'simple' example brought back nightmares and convinced me that it would be an exercise in frustration.

Worse comes to worst, I suppose I can fall back entirely to Arduino and its C++. There are drivers for this display, the GPS, the microSD and JSON files. It's just the database-ey stuff I am trying to avoid in C++, though there are probably exemplars for that I can work from too. Sigh.

For now, I am exploring what I can do with Circuitpython and displayio. It does have some nice features, like text labels you can float around at will. I used an @ sign to show the 'you are here' position. Like it? The @ is quite scaled up so looks a little fuzzy. I could design a special symbol for the purpose if I wanted to, and similarly for huts and so on, so I can turn them off and on easily. Multiple display pages are managed by the displayio class and so it is a doddle to flip quickly between displays of things of interest, like roads, rivers, huts, labels, terrain shading (there is a built-in Floyd–Steinberg dithering thing to shade areas more or less densely to approximate shades of grey). Noice! (Actually I ended up writing my own FS shader for C++. It worked OK.)

I used a Bresenham generator from bresenham · PyPI for making lines and then added code to thicken up the lines. It's fairly slow, but not much worse than the bitmaptools-draw_line and looks much better.
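As a rough illustration of the idea (this is not the PyPI package's code, nor the code that ran on the device), a Bresenham generator plus a simple thickening pass might look like the sketch below. The names `thick_line` and `set_pixel` are hypothetical; on the device `set_pixel` would write into the map bitmap.

```python
def bresenham(x0, y0, x1, y1):
    """Yield integer (x, y) points on the line from (x0, y0) to (x1, y1)."""
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:      # step along x
            err += dy
            x0 += sx
        if e2 <= dx:      # step along y
            err += dx
            y0 += sy


def thick_line(set_pixel, x0, y0, x1, y1, thickness=3):
    """Thicken a line by stamping a small square at every Bresenham point."""
    half = thickness // 2
    for x, y in bresenham(x0, y0, x1, y1):
        for ox in range(-half, half + 1):
            for oy in range(-half, half + 1):
                set_pixel(x + ox, y + oy)


# Usage: collect the pixels in a set instead of a real bitmap
pixels = set()
thick_line(lambda x, y: pixels.add((x, y)), 0, 0, 3, 0, thickness=3)
print(len(pixels))  # 18
```

Stamping a square at every point touches most pixels several times, which is one plausible reason a thickened line is slow in pure Python.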

Have found that I can blink labels nicely simply by changing the label text, say from an X to a ? once every half second and then back the next time. The choice of what chars to use when blinking can convey status. Like 'Still looking for a fix'.

In fact it is easy to set it up to display the characters in a string one after another at 0.5 second intervals to spell out a message like 'DONTPANIC'. Kinda fun.

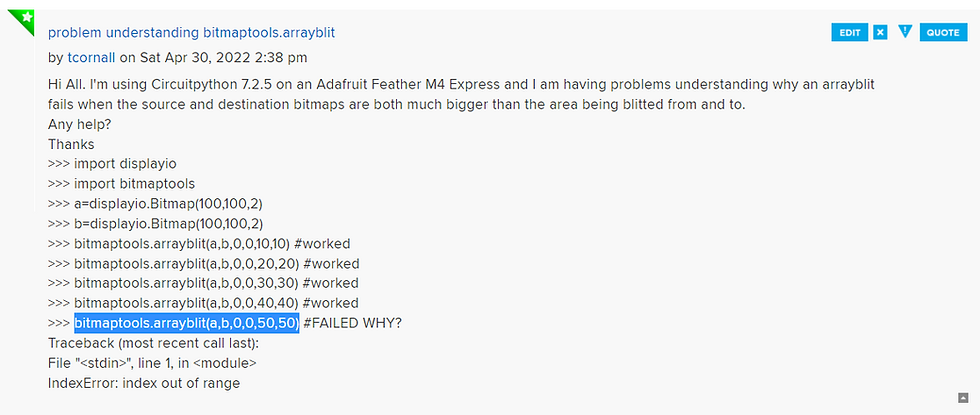

I am playing with blit at the moment but I ran into a problem and asked the Circuitpython forum for help. We'll see. Maybe I missed something really obvious... (I did. I should use bitmap.blit rather than bitmaptools.arrayblit.)

The Scroll of Names

Busily writing code to test the new display with flashing icons and blitting inverted rectangles and flashing labels to see how to overcome the monochromaticity issue, I developed one idea that has a lot of promise. I had the drawmap() use a bitmap_label (See https://docs.circuitpython.org/projects/display_text/en/latest/examples.html#label-vs-bitmap-label-comparison) to show the text of each way's name as it was drawn. (I was trying to use a label for each way but that killed the memory! Labels are very expensive, use with caution.)

Because the display refresh is blindingly fast compared to e-ink, this worked very well and demonstrated that if the list of way names and grid coords were kept as a (more efficient than labels) dict keyed by wayname then a scroll wheel or similar could move through the dict very effectively and show each way's position very smartly and clearly. Also it keeps the map nice and clean. This goes to the heart of my problem that prompted me to start this project. Exciting stuff!
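A minimal sketch of that lookup, assuming a plain dict keyed by way name with one grid coordinate per way. The way names, coordinates and the `scroll` helper are hypothetical, purely for illustration.

```python
# Hypothetical way names mapped to (x, y) grid coordinates on the map bitmap
way_positions = {
    "Ridge Road": (40, 180),
    "Creek Track": (212, 97),
    "Summit Walk": (310, 22),
}

# A sorted list of keys gives the scroll wheel a stable order to step through
names = sorted(way_positions)


def scroll(index, clicks):
    """Move through the name list by 'clicks', wrapping at either end."""
    return (index + clicks) % len(names)


index = 0
index = scroll(index, 1)   # one click forward
name = names[index]
x, y = way_positions[name]
print(name, x, y)  # Ridge Road 40 180
```

Only one label is needed with this arrangement: its text and position are updated from the dict each time the index changes.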

Don't remove this label

I am pleased by the use of labels in Circuitpython displayio, being able to change their text and position and hide them makes them really useful.

However something I observed is that if I have multiple bitmaps (which needs multiple tilegrids) in a group (or a group with a tilegrid/bitmap within another group) it slows things down even if all but one bitmap/tilegrid is hidden. This makes me sad because I was hoping to swap bitmaps in and out this way. But no. Pooh.

Snakes on a plane- Animating a course

On the other hand, I've found that an effective tool for emphasis (because I can't use color) is animation. I loaded a GPX (actually cut-down GEOJSON) track and animated it using the invert function repeatedly, running around the GPX course on the map. It looks a bit like the old-fashioned snake game but does draw the eye to the track very well.

I envision using a series of line types, like 'footsteps', 'chevrons', 'dots' and others to differentiate track types, i.e. the map, the track we should be following, the track where we actually have been... Could use 'waterdrops' for rivers.

One of the neat things about invert drawing is that if you do it and then undo it properly you only need one bitmap. However, you probably only want one track being animated at a time otherwise everything would slow down. And you'd have to be careful about making sure everything is undone in the correct order. Maybe have a copy of the map to restore from would be faster.

Tilt!

Another idea is to use a marching label or bitmap rather than a line-style. Doesn't leave a trace like a linestyle. Maybe a combination of both, because the labels don't interfere with the self-erasing that the invert (or xor) function provides. Hmm. More computation = more energy used, but no need to animate if no-one is looking. Could use a tilt-sensor (accelerometers are a dime a dozen these days) to detect when it's being held flat and looked at, compared to being upright as it would be when not in use. In fact, if I used an ESP32 with the low-power ULP processor, it could monitor the accelerometer using I2C and only wake the main processor when it detects the user looking (i.e. the tilt goes horizontal for long enough). Would want to detect non-motion though, to turn it off again in case it gets put down flat. Potentially dodgy.
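The 'horizontal for long enough' test could be sketched as below. This is plain Python over raw accelerometer readings, not ULP code, and it assumes the sensor's z axis points out of the display. The 25 degree limit, the sample count and the names `is_flat` and `LookDetector` are all hypothetical.

```python
import math


def is_flat(ax, ay, az, max_tilt_deg=25.0):
    """True when gravity lies within max_tilt_deg of the +z (display) axis."""
    g = math.sqrt(ax * ax + ay * ay + az * az)
    if g == 0:
        return False
    cos_tilt = max(-1.0, min(1.0, az / g))
    return math.degrees(math.acos(cos_tilt)) <= max_tilt_deg


class LookDetector:
    """Report 'being looked at' after enough consecutive flat samples."""

    def __init__(self, samples_needed=5):
        self.samples_needed = samples_needed
        self.count = 0

    def update(self, ax, ay, az):
        # Any non-flat sample resets the run
        self.count = self.count + 1 if is_flat(ax, ay, az) else 0
        return self.count >= self.samples_needed


detector = LookDetector(samples_needed=3)
readings = [(9.8, 0, 0), (0, 0, 9.8), (0, 0, 9.8), (0.5, 0.5, 9.7)]
print([detector.update(*r) for r in readings])  # [False, False, False, True]
```

This covers only the wake-up side; the 'put down flat and forgotten' case mentioned above would need a separate no-motion timeout.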

Another thing the accelerometers could be used for is panning, but I have a dislike of the 'gestures' feature that often comes with smart watches that does just that. Give me real touchpad/button/joystick/scrollwheel any day. Though with big fat gloves on....

Marauder's Map

I went a bit crazy testing out modes of blinking things on the map. I tried a method that read a small .bmp file in from disk and used that as a rotatable mask to invert the bits under it on the map, then invert them back to make an icon that erased itself. Then I animated it and made it bounce off walls. Thought it would be fun to make it look like a footprint. Here's the result. First some code (it's not complete but you ought to get the gist):

    import math
    import random
    import time

    import adafruit_imageload
    import bitmaptools
    import displayio

    #NOTE: 'pages' (the list of display pages) is set up elsewhere

    def marauders_map(display, thismap, bmp0, label0):
        #read a bitmap I made earlier
        iconbmp,iconPalette = adafruit_imageload.load('/sd/bitmaps/foot.bmp',
                                bitmap=displayio.Bitmap, palette=displayio.Palette)
        #for some dumb reason the foot.bmp is inverted.
        #We should be able to fix this by swapping palette
        #but that didn't work so we brute force it
        #tmp=iconPalette[0]
        #iconPalette[0] = iconPalette[1]
        #iconPalette[1] = tmp
        for m in range(iconbmp.width): #can't iterate iconbmp, sadly
            for n in range(iconbmp.height):
                iconbmp[m,n] = not iconbmp[m,n] #invert it
        thismap.drawmap(bmp0, 1) #use color index NOT color
        dsp_w,dsp_h = display.width,display.height
        dsp_cx,dsp_cy = dsp_w//2,dsp_h//2
        x,y = dsp_cx,dsp_cy #start in the center of display
        label0.hidden = True #hide the splash text
        icon_w = iconbmp.width
        icon_h = iconbmp.height
        icon_cx = icon_w//2
        icon_cy = icon_h//2 #Centre of icon bitmap
        max_icon_dim = max(icon_w,icon_h)
        #if rotate around 0,0 then dimensions of rotated_mask needs to be
        #twice as much as the largest dimension. If I rotated around the
        #centre this would be half as much in each axis and copy faster....
        #hmm, how to do that? Ahah! use px,py in rotozoom!
        rotated_mask = displayio.Bitmap(max_icon_dim,max_icon_dim,2)
        rm_w = rotated_mask.width
        rm_h = rotated_mask.height
        rm_cx = rm_w//2
        rm_cy = rm_h//2
        pi = math.pi
        pi2 = 2*math.pi
        #avoid starting horizontal or vertical
        angle = pi/4 + (pi/5)*random.random()
        #Now work out what steps in x and y
        dx,dy = int(20*math.cos(angle)), int(20*math.sin(angle))
        changlex = False #didn't hit a vertical wall yet
        changley = False #didn't hit a horizontal wall yet
        while True:
            display.show(pages[0]) #show me the <del>money</del> map
            #change map offset and zoom so we can see it moving
            #want to walk in a direction and when hit edge,
            #change direction by reflected angle
            if changlex or changley: #did we hit a wall last time
                oldangle = angle
                if changlex: #vertical wall: angle becomes pi-theta
                    angle = pi - angle
                    changlex = False
                if changley: #both might happen in a corner.
                    angle = - angle #horizontal wall: bounce to -theta
                    changley = False
                dx,dy = int(20*math.cos(angle)), int(20*math.sin(angle))
            x += dx #calc next step
            y += dy
            #now find walls and stay away from edges
            #else get source range error
            #also, want to change dir when we hit a wall
            if x < rm_cx:
                x = rm_cx
                #hit a vertical wall so flag a change of angle to pi-theta
                changlex = True
            elif x >= dsp_w-rm_cx:
                x = dsp_w-rm_cx-1
                changlex = True
            #hit a horizontal wall so flag a change of angle to -theta
            if y < rm_cy:
                y = rm_cy
                changley = True
            elif y >= dsp_h-rm_cy:
                y = dsp_h-rm_cy-1
                changley = True
            #put rotated footprint in rotated_mask in centre
            #BUT FIRST clear the destination because it will
            #have the rotated mask left over from last time
            rotated_mask.fill(0)
            display.auto_refresh = False #Doing this reduces the flicker.
            bitmaptools.rotozoom(dest_bitmap=rotated_mask,
                                 source_bitmap=iconbmp,
                                 ox=rm_cx, oy=rm_cy, px=icon_cx,
                                 py=icon_cy, angle=angle)
            #Then use rotated_mask as the mask to find bits in the map to flip
            invert_from_mask(rotated_mask,bmp0,x,y)
            #sometimes needs two refreshes. Go figure
            if not display.refresh(minimum_frames_per_second=0):
                display.refresh(minimum_frames_per_second=0)
            time.sleep(0.5) #wait a bit to slow it down.
            #now flip bits back
            invert_from_mask(rotated_mask,bmp0,x,y)
            display.auto_refresh = True #auto on again before it complains
            #time.sleep(0.001)
            #go around again

    def invert_from_mask(msk,bmp,x,y):
        m_w = msk.width
        m_h = msk.height
        m_cx = m_w//2
        m_cy = m_h//2 #place the centre of the mask at x,y
        for a in range(m_w):
            for b in range(m_h):
                if msk[a,b]:
                    bmp[x-m_cx+a,y-m_cy+b] = not bmp[x-m_cx+a,y-m_cy+b]

Ant Tracks

A thought occurred to me that might solve both the 'how do I emphasise a track in monochrome' and 'how do I label a track' at the same time. It is to have the name crawl along the track, one (or maybe 2 or 3 etc) letter at a time, using a traveling label. I have noticed that using labels is less expensive than bitmaps. Dunno why but it saves about 10mA. (i.e. code that shows a map and marches another little bitmap around it costs 20mA but if I march a label instead it only costs 10mA. Go figger)

I'll code it up and see how it looks. Code is pretty easy as I now have a Python generator for lines along a 'way' or track, so all I need to do is position the next letter in the track name at the next point on the path. Simples. I can even rotate the letter to reflect the direction of travel, though that might be worse, unless you like reading upside down. I'll play and see.

Battery Measurement

There's a problem trying to measure the battery voltage. Although an input to the Cortex M4 is provided with the battery voltage divided by 2 and it can be read using an ADC (AnalogIn(board.BATTERY) in Circuitpython) it doesn't work properly if you try to use the 3.3V out of the voltage regulator as the AREF voltage because when the battery gets low it won't necessarily BE 3.3V anymore. Y'see the problem? You need to cut AREF pin away from 3.3V and instead supply a reference voltage LOWER than the 3.3 which can be got even when the battery voltage is low enough that the voltage regulator is 'drooping'. That needs something like a Max6006 2.5V reference chip. Choose one that has a low current need. Jaycar has a LM336 but it needs like a mA to work. OK-ish, I guess but 1uA would be better...

This has a knock-on effect on any other ADC you are using (like for the keyboard). You'd need to replace the 3.3 used in the conversion formulae with 2.5 (or whatever you end up using).
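A sketch of the conversion, with the reference voltage kept as a parameter rather than a hard-coded 3.3. It assumes the 16-bit (0 to 65535) raw value that CircuitPython's AnalogIn.value reports and the divide-by-2 on the battery input described above. The function name `battery_volts` is hypothetical.

```python
def battery_volts(raw, vref=2.5, divider=2.0, full_scale=65535):
    """Convert a raw 16-bit ADC reading to battery volts.

    raw        : ADC reading, 0..full_scale
    vref       : the ADC reference voltage actually in use
    divider    : ratio of the resistor divider ahead of the ADC pin
    """
    return raw / full_scale * vref * divider


# A mid-scale reading means different things with different references
print(round(battery_volts(32768, vref=3.3), 2))  # 3.3
print(round(battery_volts(32768, vref=2.5), 2))  # 2.5
```

Every other ADC channel (such as the keyboard input mentioned above) would need the same `vref` passed through its own conversion.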

MAYBE A WAY TO DETECT LOW BATTERY!!!

Circuitpython has a microcontroller module that gives the cpu voltage. My hope is that it is measured using an internal bandgap reference and will be correct even if the supposed 3.3v supply is low. You get it by:

>>>import microcontroller as mc

>>>print(mc.cpu.voltage)

3.31917

If so, I can use it to detect low battery voltage. If it is > 3.3 then trust AnalogIn(board.BATTERY) to give the battery voltage, and if it is < 3.3 don't (or maybe use 3.2 to give some leeway for regulator variance).

Novram

The Feather M4 Express has 8K of non-volatile RAM available! I could use that to hold some state during deepsleep if I end up using that. It is accessed via:

>>>import microcontroller as mc

>>>print(len(mc.nvm))

8192

>>>print(mc.nvm[0])

255

>>>mc.nvm[0]=2

>>>print(mc.nvm[0])

2

This is not the same as alarm.sleep_memory (of which the Feather M4 has none) but it is non-volatile and would persist between power cycles. 8K is quite a bit. What could I use it for? Last fix coordinates is the obvious one, to help find the right map on powerup before a new fix.
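One way the last fix could be packed into those bytes is sketched below. The layout (a marker byte followed by latitude and longitude as integers in units of 1e-7 degrees), the marker value and the helper names are all assumptions. On the device the `nvm` argument would be microcontroller.nvm; here a bytearray filled with 255 stands in for erased memory.

```python
import struct

FIX_FORMAT = "<Bii"          # marker byte, latitude, longitude (little-endian)
FIX_SIZE = struct.calcsize(FIX_FORMAT)
MARKER = 0xA5                # hypothetical 'valid record' marker (erased NVM reads 255)


def save_fix(nvm, lat, lon):
    """Store a fix in the first FIX_SIZE bytes of nvm."""
    nvm[0:FIX_SIZE] = struct.pack(FIX_FORMAT, MARKER,
                                  round(lat * 1e7), round(lon * 1e7))


def load_fix(nvm):
    """Return (lat, lon) in degrees, or None if no valid record is stored."""
    marker, lat, lon = struct.unpack(FIX_FORMAT, bytes(nvm[0:FIX_SIZE]))
    if marker != MARKER:
        return None
    return lat / 1e7, lon / 1e7


fake_nvm = bytearray(b"\xff" * 8192)   # stand-in for microcontroller.nvm
print(load_fix(fake_nvm))              # None
save_fix(fake_nvm, -37.8136, 144.9631)  # example coordinates only
print(load_fix(fake_nvm))
```

Writing the whole record in one slice assignment keeps the number of separate flash writes down, which matters if the fix is saved often.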

Wax on, Wax off... Power saving by disabling GPS between fixes.

So far I haven't had a lot of luck with deepsleep on the Feather. Deepsleep seems to work (in that it turns off the CPU and resets it after a timeout) but I can't keep the GPS disabled during sleep because the output pin from the CPU goes high impedance and the EN pin on the GPS is pulled low and so it goes on. I'd need to pull that resistor off the GPS board, or pull it to 3.3V instead of GND.

Lightsleep seems not to work. It just wakes up immediately and keeps on going. It is supposed to pause for the given time and then continue but the pause doesn't happen. Will play further sometime.

Another thing that is NOW working is keeping the CPU active and just turning off the GPS for 300s after a fix. Saves a lot of current. Initially it seemed never to get a new fix. It says it has, but the timestamp doesn't change so it can't be. You just can't believe gps.has_fix or gps.update() returning True to indicate a new fix. You have to check the timestamp and only disable the GPS after a real new fix, otherwise you never get one. It seems to take on the order of several minutes to get a real new fix, at least when inside the house. The first real fix after startup is similarly quite slow (again, inside the house). NOTE: This was caused by not polling gps.update() at the 'right' frequency. It has to be much faster than once per second. 200ms between polls?
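The timestamp check can be sketched independently of the GPS library. The `FixWatcher` class is hypothetical; on the device the arguments would presumably come from gps.has_fix and the GPS timestamp after each fast poll of gps.update().

```python
class FixWatcher:
    """Track the last fix timestamp so repeated (stale) fixes can be ignored."""

    def __init__(self):
        self.last_stamp = None

    def is_new_fix(self, has_fix, stamp):
        """Return True only when there is a fix and its timestamp has changed."""
        if not has_fix or stamp is None or stamp == self.last_stamp:
            return False
        self.last_stamp = stamp
        return True


watcher = FixWatcher()
# Hypothetical (hour, minute, second) stamps standing in for the GPS time
print(watcher.is_new_fix(True, (10, 15, 30)))  # True: first real fix
print(watcher.is_new_fix(True, (10, 15, 30)))  # False: same timestamp, stale
print(watcher.is_new_fix(True, (10, 15, 31)))  # True: timestamp moved on
```

Only a True result from `is_new_fix` would then be allowed to start the 300s GPS-off period.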

This all means that I may as well turn the device completely off between required fixes and wait the minutes it takes to re-acquire on startup.

And another thing. The battery is taking FOREVER to charge. There is a resistor I can change to speed it up. Might look into that.

Never take the first offer. Is the fix stable just out of disable/standby?

I had seen when I first started using it that the GPS fixes from the Featherwing Ultimate GPS were very stable compared to the previous Adafruit Ultimate GPS that I was using with the ItsyBitsy. That is, until I started disabling or standby-ing the GPS between fixes for 5 minutes. Then I saw that it was very unstable, especially when left overnight. So I decided to NOT take the first GPS fix after re-enabling the GPS but instead the 5th. We'll see what that does for consistent results. Observations show so far (testing with a known fixed position) that the first fix after coming out of disable/standby is almost always the worst!

Well, taking the nth fix rather than the first one works to improve things, but doesn't eliminate the occasional 'bad' fix which is out by hundreds of meters. I'd say that using the idea of disabling the gps for any time between fixes definitely makes the accuracy and consistency worse. (Probably why the UltraTrac method on the Garmin Fenix is so bad)

However, the stats are revealing. Looking at the fixes that come in after re-enabling, it is almost always the first one that is worst.
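Skipping the early fixes amounts to very little code. A minimal sketch, assuming the fixes arrive as an iterable of (lat, lon) pairs after the GPS is re-enabled; the `nth_fix` name and the sample coordinates are hypothetical.

```python
def nth_fix(fixes, n=5):
    """Return the nth fix (1-based) from an iterable, or None if it ends early."""
    for count, fix in enumerate(fixes, 1):
        if count == n:
            return fix
    return None


# Hypothetical fixes after re-enabling: the first is far off, later ones settle
after_enable = [(-37.80, 144.90), (-37.8130, 144.9625), (-37.8135, 144.9630),
                (-37.8136, 144.9631), (-37.8136, 144.9631)]
print(nth_fix(after_enable, n=5))  # (-37.8136, 144.9631)
print(nth_fix(after_enable, n=6))  # None
```

Returning None when fewer than n fixes arrive lets the caller decide whether to keep the GPS enabled a little longer.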

By using the disable (which turns off the power-supply FET to the GPS), I am essentially turning the GPS off and on. There are also command sequences one can use to put the gps into standby without powering it down. They cut the current almost as much as a disable does and are 'kinder'.

I've been watching a default 0,1,1 setup (which means no GPS disable, 1 second update time and take the first fix) and it appears rock-solid so far, after some 12 hours, with no points more than 3m or so from 'home'. Far, far better than the tens or hundreds of meters I saw previously when using GPS disable between fixes. This is the gold standard, but it uses a lot more power than having the GPS in standby or disabled most of the time.

Best of Both Worlds?

Here is a table of things I tried to improve both accuracy and power saving using the built-in abilities of the gps for periodic standby and also the gps_disable.

It seems all the standby or disable schemes cause poor accuracy on the first and second fixes afterwards. 3rd fix is looking best.

Reference for PMTK commands PMTK command packet-Complete-A11 (rhydolabz.com)

| Test | Accuracy? | Improves power saving? | Comment |
| --- | --- | --- | --- |
| Standby before disable | First fix after is worst | Yes. Is it better than just standby? Needs a test | PMTK161 and gps_disable pin |
| Cold start every time after re-enable | Slow to get a fix after | Yes | PMTK103 |
| Don't disable, no standby | Best, even the first, though second might be better? | No | Best for accuracy, worst for power savings... |
| Periodic standby under GPS control, no disable | First fix is worst | Yes | PMTK225,2,.... |
| Standby under code control instead of disable | First fix is worst. Is accuracy better if don't disable at all? Take the nth fix. n is prob 3 | Yes | PMTK161 and don't disable |
| Just disable | First fix is worst. Is accuracy worse than using standby command if you take the nth fix? | Yes | |

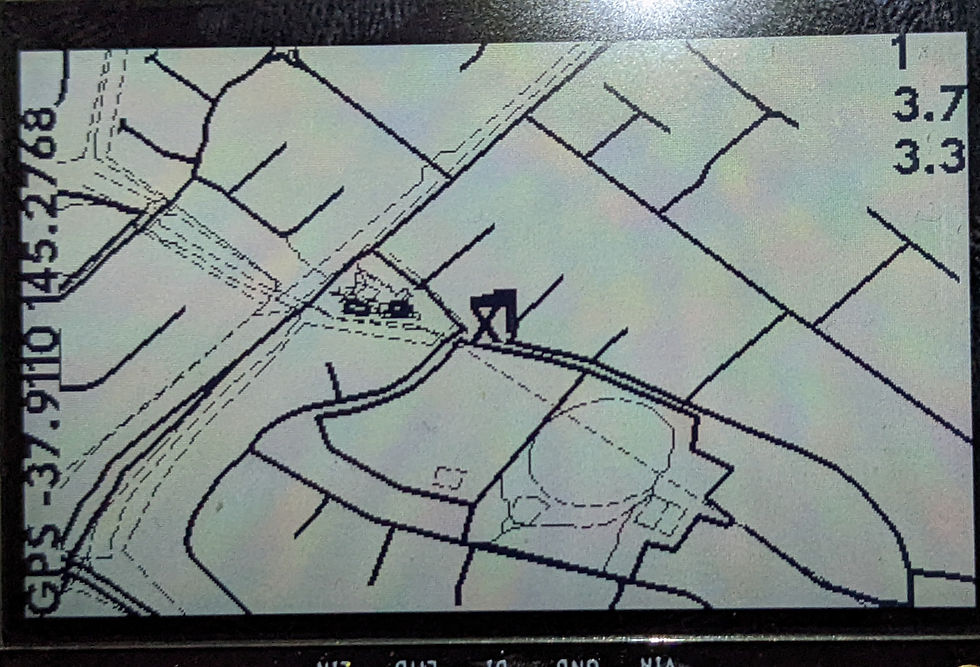

Here's a movie that shows how accuracy improves with number of fixes. The 'X' is where I should be and the 'A' shows how it gets closer and closer with number of fixes (up to 20 at 1 sec intervals) after re-enabling the GPS. BTW, the size of this video was 135MB out of the camera and I cut it down to 1.2MB with DaVinci Resolve by clicking on Deliver in the bottom taskbar, choosing MP4 and 720x576 PAL in the video options, and restricting the bitrate to 1000 kbps. Then hit Add to Render Queue at middle left and Start Render at middle right. Not a lot of difference in video quality either. I sped it up by 3 as well. (Nice Youtube tute at HOW TO EXPORT VIDEO IN RESOLVE 17 - DaVinci Resolve Rendering Tips - YouTube by Casey Faris)

I'm running out of memory... and so is my software

I can't do much about my personal memory (that's what sticky notes are for) but for this project, gc.collect() and gc.mem_free() are my friends. Call gc.collect() before drawing the map as that needs large-ish chunks of memory. Call it again after returning from the function that draws the map, to clean up all the now unused objects.
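A small wrapper for that collect, draw, collect pattern might look like this sketch. gc.mem_free() exists on CircuitPython but not on desktop Python, so the helper falls back to None there. The names `free_bytes` and `with_clean_heap` are hypothetical.

```python
import gc


def free_bytes():
    """Free heap in bytes where gc.mem_free() exists, otherwise None."""
    mem_free = getattr(gc, "mem_free", None)
    return mem_free() if mem_free else None


def with_clean_heap(draw):
    """Collect garbage, run draw(), collect again; report free memory each side."""
    gc.collect()              # make room for the large-ish allocations
    before = free_bytes()
    result = draw()
    gc.collect()              # clean up the now unused objects
    after = free_bytes()
    return result, before, after


# Usage with a stand-in for the map drawing function
result, before, after = with_clean_heap(lambda: "map drawn")
print(result, before, after)
```

Logging `before` and `after` on each redraw is a cheap way to spot a slow leak or creeping fragmentation.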

Also, making the map bitmap a bit smaller than the display width (I am putting labels down the side after all and the map doesn't need to be under them)

I also need to keep in mind that there's 8K of nvm non-volatile memory available. It's not for ordinary use and needs to be accessed like a byte array, but 8K, that's huge!

Don't import libs that you don't need. Some of them eat up memory. Also you can just import the stuff you need like from thingy import that_thing_i_need rather than import thingy

HOWEVER, counterintuitively, sometimes doing this can actually decrease your free memory. Dunno why, but it does. Test it every time.

Fonts are kept in memory, and they are pretty big too, e.g. loading font = bitmap_font.load_font("fonts/LeagueSpartan-Bold-16.bdf") uses 8k. Consider using from terminalio import FONT (256 bytes). Doesn't look as nice but is serviceable. (Nah, didn't like it.)

Turning off all my diagnostic print('some string about something') saved 3800 bytes. Neat.

The big breakthrough came when I simply did del(self.thismap) and gc.collect() before creating a new map with self.thismap=mapSharp.map(blah blah blah). Apparently, despite having at least 40K of ram available, I couldn't get a 2048 byte chunk needed during reading in of the map 'ways' one at a time. Manually cleaning up the mapSharp.map object and doing garbage collection first resolved this. (However, read on for a side effect of trying to manually use gc.collect() too often!)

Another thing I did is to make the dict that each 'way' gets read into a persistent object by making it a member of the app class. I'm hoping that protects it somehow from memory fragmentation by causing it to grab a large enough chunk of memory early and hold onto it (I can't find anything to let me verify that. uheap seems to have disappeared). I'm beginning to see the perils of letting Python manage the memory now rather than doing it myself a-la C and C++... Hmmm, yet another reason (apart from speed) to go back to Arduino-world.

Is it possible I've hit the limit on what advantage Circuitpython gave me for 'rapid' development and it's time to take those lessons back to C++? The time is definitely drawing nearer because I can't seem to add features without hitting dynamic memory management problems.

What I need are SHARP Memory display, json, gfx and gps libs. Arduino has them all.... Dang it, I am almost there too. Really just user-interface to go, but as soon as I include reading in way labels, the mem probs will come back.

It hurts my mo' to go that slow

I was annoyed by how long it takes to draw my maps from SD card, so I did some tests to see how much I could speed it up by changing the drawing routines. To my astonishment, I found that bypassing the drawing (i.e. pretending I had optimised it all away) DID NOT speed anything up. So then I looked at the code further and the only remaining thing is how long it takes to read the 'ways' from the microSD. It takes 90s to load and draw a 1.8MB map. (Karoo, for reference) If that's all read, it's a transfer rate of about 20000 bytes per second. The microSD is supposed to be about 80MB/s. So something is askew....

I tried to speed up the SPI bus connection by asking for a Baud rate of 640_000_000 bps (if I ask for much more it fails so I guess that's the best it can do). Still sloooow.

On closer inspection I saw I was still (and unnecessarily) doing gc.collect() every 'read next way' cycle when creating the map object. Removing that reduced map load and draw to 30s. That's a lot better but still far from 80MB/s raw read speed... What else am I doing in that cycle? Not much. I can shave a few seconds total by not drawing anything but what's the point of that?
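The arithmetic behind those figures, as a quick check. The 1.8MB, 90s, 30s and 80MB/s numbers come from the text; treating 1.8MB as 1,800,000 bytes is an assumption.

```python
MAP_BYTES = 1_800_000        # 1.8MB map file, taken as decimal megabytes
CARD_RATE = 80_000_000       # the nominal 80MB/s quoted for the microSD


def bytes_per_second(size_bytes, seconds):
    """Effective throughput for reading (and drawing) the whole file."""
    return size_bytes / seconds


print(bytes_per_second(MAP_BYTES, 90))   # 20000.0  with gc.collect() every way
print(bytes_per_second(MAP_BYTES, 30))   # 60000.0  without it
print(MAP_BYTES / CARD_RATE)             # 0.0225   seconds at the nominal rate
```

Even the faster run is over a thousand times slower than the nominal card speed, which suggests the time goes somewhere other than the card itself.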

Still, it's better than it was before I went bug hunting. We'll take that for a win. Maybe I need to investigate various microSD cards and find a faster one.

The Last Jedi ( I used The New Hope already...)

Moving back to Arduino-Land with new display and MPU

Sigh. Memory fragmentation by Circuitpython's dynamic memory (mis)management defeats me. I gave in and moved back to C++ Arduino-land with my Feather M4 and the Sharp Memory display.

After fighting with a mistake in the display setup that left it thinking it had to do a software SPI even though using the standard SPI pins (my mistake really) and so clobbering the SD card reader, I got it to read and draw a map. It's pretty fast at 5 seconds for the same map that took 15s to draw using Circuitpython. And it's drawing the entire map, not just a 2 or 3km section around 'home', so it's even faster than it appears. I'll just have to be happy that a lot of the work I previously did with the e-ink display in Arduino-land translated to the Sharp Memory Display without too much kerfuffle.

GPS is working too and best of all I can use a timer interrupt for polling it. (Couldn't use interrupts in Circuitpython, so that's a plus, though I lose asyncio so that's a minus.)

Currently, after loading the map and drawing it, to get the street names I read the mapfile again. I'll change that code to get them at the same time as the coordinates and save a few seconds. I'll put in some code to see how the memory fares and see if it is worth pursuing this at all or if I am just going to run into the same lack of resources as I did with the Circuitpython stuff. I bet I don't. I bet it has plenty of memory and none of the mem alloc problems I got with Circuitpython. Grrr.

It is using a DynamicJSON however, so I might be wrong.... No, because I specify the max size of the re-usable 'way' document with DynamicJsonDocument wayDoc(30000) and it didn't bitch. (30_000 is actually a teensy bit more than necessary from what I've seen so far, but you never know)

After a few weeks beavering away I can see that the memory is MUCH better used in the C++ with static allocation. I end up with 140KBytes of stack-heap left after reading and drawing a map whereas in the CircuitPython version I was lucky to have a badly fragmented 30KByte left. C++ wins on speed and memory friendliness. Also easier to debug with the JLink and VSCode, so I guess I have to be happy. (Except when I run into some uninitialised pointer thing or a string buffer overrun that clags the entire system....)

INVERSE

The inverse pixel drawing operation is useful because it erases itself, but it does require reading the pixel buffer memory rather than just writing to it. The cost is worth it. I tried to shoehorn it into the existing Arduino Adafruit_SharpMem class by subclassing and overriding the drawPixel operation but ran into the issue that functions of the base class would use the base class's drawPixel rather than the derived class's. So I gave up on subclassing and just copied the Adafruit_SharpMem class and added the INVERSE operation to its drawPixel(). It gets done when a color of 2 is asked for. (The display only has colors 0 and 1, so 2, 3, etc. can be used for other unary operations on the pixel value that is already there, like 'invert it' for example.)
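The idea can be sketched in a few lines of Python over a packed 1-bit buffer. This is an illustration only, not the Adafruit_SharpMem code: the class name, the constant names and the bit order within each byte are assumptions.

```python
CLEAR, SET, INVERSE = 0, 1, 2   # 2 is the extra 'invert what is there' color


class Framebuffer1Bit:
    """A 1-bit-per-pixel framebuffer packed eight pixels to a byte."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.stride = (width + 7) // 8            # bytes per row
        self.buf = bytearray(self.stride * height)

    def get_pixel(self, x, y):
        return (self.buf[y * self.stride + x // 8] >> (x & 7)) & 1

    def draw_pixel(self, x, y, color):
        if not (0 <= x < self.width and 0 <= y < self.height):
            return                                 # silently clip
        i = y * self.stride + x // 8
        mask = 1 << (x & 7)
        if color == INVERSE:
            self.buf[i] ^= mask                    # read-modify-write
        elif color:
            self.buf[i] |= mask
        else:
            self.buf[i] &= ~mask & 0xFF


fb = Framebuffer1Bit(16, 8)
fb.draw_pixel(3, 2, SET)
fb.draw_pixel(3, 2, INVERSE)   # flips the set pixel back to clear
fb.draw_pixel(4, 2, INVERSE)   # flips a clear pixel to set
print(fb.get_pixel(3, 2), fb.get_pixel(4, 2))  # 0 1
```

Drawing the same shape twice with INVERSE leaves the buffer exactly as it started, which is the self-erasing property described above.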

The main reason I did this is because I had put together a vector text drawer and wanted to be able to draw rotated strings onto the map and then undo them.

Unfortunately, drawing vector text using INVERSE doesn't look so good because there are overlapping strokes and the overlapped bits come out white. So I need to be able to draw into a small bitmap using BLACK and then copy its black bits into the main bitmap using INVERSE. Either that or stop messing about and implement/steal some blitting code for this display in C++. Given that it looks like I'll need something like that anyway, I think the ability to change the target bitmap for drawing stuff plus a rotZoomBlit() function to copy one bitmap onto another and keep/restore a backup of the affected area is next. Shouldn't be too hard on the memory as the label bitmap doesn't have to be huge.
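Why overlapping strokes go wrong, and why a scratch bitmap fixes it, can be shown with Python sets standing in for the bitmaps. Each set holds the coordinates of the pixels that are 'on'. The function names and the two example strokes are hypothetical.

```python
def invert_strokes_naively(bitmap, strokes):
    """INVERSE-draw each stroke directly: shared pixels get flipped twice."""
    for stroke in strokes:
        for point in stroke:
            bitmap ^= {point}          # toggle this one pixel


def invert_strokes_via_scratch(bitmap, strokes):
    """Draw strokes solid into a scratch bitmap, then INVERSE it on once."""
    scratch = set()
    for stroke in strokes:
        scratch.update(stroke)         # solid drawing: overlaps are harmless
    bitmap ^= scratch                  # every glyph pixel is flipped exactly once
    return scratch


# A '+' shape: a horizontal and a vertical stroke crossing at (1, 1)
strokes = [[(0, 1), (1, 1), (2, 1)], [(1, 0), (1, 1), (1, 2)]]

naive = set()
invert_strokes_naively(naive, strokes)
print(len(naive), (1, 1) in naive)     # 4 False  (the crossing came out blank)

clean = set()
mask = invert_strokes_via_scratch(clean, strokes)
print(len(clean), (1, 1) in clean)     # 5 True

clean ^= mask                          # inverting with the same mask erases it
print(len(clean))                      # 0
```

Keeping the scratch mask around means the text can be removed later without redrawing the strokes.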

Adafruit GFX Library: GFXcanvas1 class might be useful here. (I think this might already be used under the covers by Adafruit_GFX and hence Adafruit_SharpMem display class...) Examples of its use can be found in Adafruit_GFX.cpp that comes in the Arduino library of the same name. Mind you it also comes with the warning "May contain the worst bugs known to mankind..."

Given that the class doesn't have things like fillTriangle and so-on (it does have drawLine) I wonder if it might be better to simply instantiate another copy of the my_SharpMem class (minus the SPI connectivity) and use that as my canvas. It will have all the goodies like drawVectorString() that I've added already. All I need then is the rotZoomBlit() to cut and paste bits from one framebuffer to the other. It doesn't need to be full size (though there might be advantages of making a full-size backup copy ...). I'll think about that during my next sojourn in the shower. (I get my best thinking done under the shower. Maybe it's the hot water massaging my brain...) Yeah, full size is 'only' about 12k (400 x 240 pixels at 1 bit each is 12,000 bytes) and it is handy to be able to make a complete copy of the display, so I'll do it that way.

Fangs for the Memories...

I learned (relearned) a few new things about Arduino (and C++ in general) malloc and memcpy today. First is that Arduino's malloc never fails even when it should. I asked for 0 bytes and it didn't give me a NULL. I asked for more memory than it actually had and it said "Yeah, sure, here you are" and gave me a non-null pointer. It's BROKEN! And then memcpy. Stupid bloody miserable thing has destination as first arg and source as second, not the other way round as I would expect. Aaaaaarg(h)s!

Anyway, ranting aside, I got a second framebuffer and copy coded up and they work. Now I can see about labels.

Eventually got this to work. Had all sorts of fiddly problems implementing a tiling system to copy chunks from the map to a backup, draw on the map, then restore the map from where I had saved it previously. Most of the problems came from edge and corner cases where memory wrapped or overran, or when I wanted to copy chunks close to an edge and had to adjust the width of the chunk to compensate. Add in byte alignment and screen rotations and it was a 3-day nightmare to get going. Eventually I did, however. One of the things I could really have done with during this session was a good, well-documented, relatively easy to understand and use debugging tool for breakpoints and single stepping whilst watching variables. Unfortunately what I had was the Segger JLink EDU. Read on for more ranting.
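The edge and alignment handling can be sketched roughly like this. It assumes an unrotated 400 x 240 framebuffer at 1 bit per pixel (50 bytes per row), and the names `Tile`, `alignedTile`, `saveTile` and `restoreTile` are invented for the example. It is not the code that runs on the device and it ignores screen rotation entirely.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Assumed screen geometry: 400 x 240 pixels, 1 bit per pixel.
const int SCREEN_W = 400;
const int SCREEN_H = 240;
const int ROW_BYTES = SCREEN_W / 8;

// A tile expressed in whole bytes horizontally and rows vertically.
struct Tile {
    int byteX;  // first byte column
    int y;      // first row
    int byteW;  // width in bytes
    int h;      // height in rows
};

// Clamp a pixel rectangle to the screen and widen it to byte boundaries.
Tile alignedTile(int x, int y, int w, int h) {
    int x0 = std::max(x, 0), y0 = std::max(y, 0);
    int x1 = std::min(x + w, SCREEN_W), y1 = std::min(y + h, SCREEN_H);
    Tile t = {0, 0, 0, 0};
    if (x1 <= x0 || y1 <= y0) return t;  // entirely off screen
    t.byteX = x0 / 8;                    // round the left edge down
    t.byteW = (x1 + 7) / 8 - t.byteX;    // round the right edge up
    t.y = y0;
    t.h = y1 - y0;
    return t;
}

// Copy the tile's bytes out of the framebuffer into a backup.
std::vector<uint8_t> saveTile(const std::vector<uint8_t>& fb, const Tile& t) {
    assert(fb.size() == static_cast<std::size_t>(ROW_BYTES * SCREEN_H));
    std::vector<uint8_t> backup(t.byteW * t.h);
    for (int row = 0; row < t.h; ++row)
        std::memcpy(&backup[row * t.byteW], &fb[(t.y + row) * ROW_BYTES + t.byteX], t.byteW);
    return backup;
}

// Put the saved bytes back where they came from.
void restoreTile(std::vector<uint8_t>& fb, const Tile& t, const std::vector<uint8_t>& backup) {
    assert(backup.size() == static_cast<std::size_t>(t.byteW * t.h));
    for (int row = 0; row < t.h; ++row)
        std::memcpy(&fb[(t.y + row) * ROW_BYTES + t.byteX], &backup[row * t.byteW], t.byteW);
}
```

Copying whole bytes means a tile may save up to 7 extra pixels on each side, which costs a little memory but avoids bit shifting during the save and restore.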

Debugging the debugger

OK, there are lots of people out there that use the Segger JLink EDU module and love it. It's a cute little thing with a USB connection and a cable that, with some hacking, I could connect to my Cortex M4 Feather board's SWCLK and SWDIO pads. (Hacking because the miniature header provided has a 0.05" pin pitch instead of the usual 0.1".) Then I had to find out how to use it, preferably with VSCode and the cortex-debug extension.

Lots of tutorials and blogs and advice on how to do this, but as with many flexible software/hardware tools, the flexibility just means that there are lots more ways to get it wrong. Plus everybody seemed to have a different way to use it. And give it its due, that flexibility would be great if I needed it later (and I will) but I just wanna get started!

Eventually I found things like: Visual Studio Code for C/C++ with ARM Cortex-M: Part 4 – Debug | MCU on Eclipse and the essentials seemed to be changes that needed to be made to a VSC file called launch.json

{

// Use IntelliSense to learn about possible attributes.

// Hover to view descriptions of existing attributes.

// For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387

"version": "0.2.0",

"configurations": [

{

"cwd": "${workspaceFolder}",

"executable": "D:\\Arduino\\myProjects\\sharp_gps\\build\\sharp_gps.ino.elf",

"preLaunchTask": "rename_headerfile_in_build_sketch_folder",

"name": "Debug with JLink",

"request": "launch",

"type": "cortex-debug",

"device": "ATSAMD51J19A",

"runToEntryPoint": "main",

"showDevDebugOutput": "none",

"servertype": "jlink",

"interface":"swd",

"runToMain": true,

"serverpath": "D:\\SEGGER\\JLink_retry\\JLinkGDBServerCL.exe",

}

]

}

There are other files in my workspace (the sharp_gps folder) as well, like settings.json and arduino.json (where I had to change the "output" to "./build" from "../build"). One thing I learned was that it would be nice to turn off compiler optimisations by changing the boards.txt file in the Arduino files, so that it didn't optimise when debugging is turned on. At first, though, I found that once I got it to do that my project code wouldn't run. It kept hanging in the GPS module, so I reversed the change and had to put up with the strangeness of variables being 'optimized away' and code skipping around when single-stepping. Here is how you do it, however:

Find the boards.txt in C:\Users\terry\AppData\Local\Arduino15\packages\adafruit\hardware\samd\1.7.8\boards.txt and then find the board in question (Feather Cortex M4 Express) and find the adafruit_feather_m4.menu.debug.on.build.flags.debug=-g

line and add a -O0 to the end. (That's a minus capital 'O' zero)

Then in VSCode's project file, find the line about debugging and change it to debug=on. However, initially I thought it caused the GPS part of the code to hang, so I took it out again. Later attempts with optimisation turned off in this way seemed to work OK, so I left it that way. It does use twice as much code space (30%) but it makes debugging with the JLink sooooooo much easier!

#TC made a change to make the debug version have NO optimisations

adafruit_feather_m4.menu.debug.on.build.flags.debug=-g -O0

#adafruit_feather_m4.menu.debug.on.build.flags.debug=-g

Also, I needed to change this file:

//This is the D:\Arduino\myProjects\sharp_gps\.vscode\arduino.json file in my project folder.

//Change that debug=off to debug=on.

//Note that it might impact on whether the project runs at all though.

{

"configuration": "cache=on,speed=120,opt=small,maxqspi=50,usbstack=arduino,debug=off",

"board": "adafruit:samd:adafruit_feather_m4",

"sketch": "sharp_gps.ino",

"port": "COM15",

"output": "./build",

"comment": "TC I changed output for ./build from ../build",

"debugger": "jlink"

}

Eventually then, I could hit F5 in VSCode and the jlink would be found and started and the code on the board would be run to a breakpoint and I'd have the ability to continue or single-step and set more breakpoints etc. Lovely.

One of my other boggles was that the debugging session wouldn't treat one or more of my source code files as if they were part of the project and I had to use the source code files it had copied instead. Fine, be like that. I found that editing arduino.json in my project folder's .vscode folder and changing the "output" to ./build from ../build helped a bit but not enough. Eventually I got to deleting the sketch folder that gets created every time I 'verified' the code and that forced the debugger to find and use the correct source file. It's odd because it didn't have this issue with the sharp_gps.ino file, but then again it renamed that file in the sketch folder to sharp_gps.ino.cpp so maybe that explains it... Wonder if I can make it delete/rename the build/sharp_gps.h file? (Eventually I worked out how to do this with a "preLaunchTask" in the launch.json and a task defined in tasks.json in my .vscode folder in the sharp_gps project folder. Seems a brutal way to do it but I couldn't figger any more subtle way to tell it to "use the original bloody .h file instead of inventing your own you prick of a process".)

{ //tasks.json in .vscode in the project folder

// See https://go.microsoft.com/fwlink/?LinkId=733558

// for the documentation about the tasks.json format

//note that you can't use '&' to get two commands on one line.

//Powershell doesn't like it. So I used \n and that worked ok

"version": "2.0.0",

"tasks": [

{

"label": "rename_headerfile_in_build_sketch_folder",

"type": "shell",

"command": "del build\\sketch\\copy_sharp_gps.h \n ren build\\sketch\\sharp_gps.h copy_sharp_gps.h"

}

]

}

Then there was the question of how to get the JLink to load new code after I recompiled. The usual Arduino:Upload command available thru VSCode using the bootloader method wouldn't work when I had the JLink connected and in a debugging session, nor even when I stopped the debugger. I searched in vain for a debug button/command labelled 'reload the code' and even contemplated a command-line approach using JLink commander. However, it all appeared to be unnecessary. I eventually worked out that if I made a change to the code and then did a 'verify' (which is a build) but not an Upload, the debugging process, when restarted, seems to reload the binary or hex file without telling me. I do have to do a disconnect button-press and then F5 to restart the debugger again but the changes were there all right. Sigh. Sometimes explicit is better than 'do it whilst he isn't looking'. It'd be nice not to have to disconnect/reconnect (virtually) the debugger but I can live with it.

Mind you, I do like it that the code gets loaded for me and it is much faster than the Arduino:Upload (which decides it has to re-compile every-freakin'-time)

Oooops, VSCode just crashed. It does that sometimes. Fortunately a restart quickly gets it back. Dunno if this has anything to do with the cortex-debug extension. Glad I wasn't in the middle of a tricky bit of single-stepping when that happened.

Just a'scrolling along, singin' a song...

Here's a nice user interface that looks like it comes straight out of a retro iPod. 5 buttons and a scroll wheel in one. Probably not waterproof, but no electronics in it so probably not easily affected by water either. Built around a mechanical rotary encoder and press buttons. Not as pin efficient as my analog 4x4 key matrix that only needs one (analog in) pin plus ground, but maybe more easily connected to interrupts.

GEOrge and JaSON

I have discovered GEOJSON. (TLDR, I don't think it goes far enough in reducing the xml for my purposes.) It is a JSON format for Geographical Information Systems and is quite similar to what I had evolved for my own purposes (read_osm_to_micropython.py) when I converted OpenStreetMap XML to a JSON format, removing one level of abstraction. (I.e. the xml listed nodes, each of which had a coordinate, and then listed ways which referenced the nodes by id, which meant the nodes had to be looked up. Nice if you have the memory, but I don't. So I just merged them so the ways were self contained and had the coordinate lists immediately. This worked because in the main the nodes were not really referenced by multiple ways, and merging them meant they could be read from file in a single-pass fashion. Much faster.) GEOJSON is supposedly much faster and smaller than XML as well so I think I might end up adopting it.
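The node-merging step, sketched in C++ for illustration (the real converter is a Python script). The struct and function names here are made up, and XML parsing is left out: the nodes and ways are assumed to have been read already.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

struct LatLon { double lat; double lon; };

// A way as OSM XML lists it: a name plus references to node ids.
struct RawWay {
    std::string name;
    std::vector<long long> nodeIds;
};

// A self-contained way: the coordinates are stored inline.
struct FlatWay {
    std::string name;
    std::vector<LatLon> coords;
};

// Resolve every node reference once, on the PC, so the device never has to.
std::vector<FlatWay> flattenWays(const std::unordered_map<long long, LatLon>& nodes,
                                 const std::vector<RawWay>& ways) {
    std::vector<FlatWay> out;
    for (const RawWay& way : ways) {
        FlatWay flat;
        flat.name = way.name;
        for (long long id : way.nodeIds) {
            auto it = nodes.find(id);
            if (it != nodes.end()) flat.coords.push_back(it->second);  // skip unknown ids
        }
        out.push_back(flat);
    }
    assert(out.size() == ways.size());
    return out;
}
```

A node shared by two ways simply gets written out twice, which is the trade being made: a bit more file space in exchange for single-pass reading.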

Another thing of interest is the online tools for extracting features of interest from OSM. For example, from Extract, Convert and Download Data from OpenStreetMap (mygeodata.cloud) I can extract just Accommodation, which includes shelters and huts. However, I find the filtering a bit hit and miss. E.g. in the Hotham area, using predefined filters like Accommodation or Alpine Huts, I couldn't get it to find Derrick Hut, even though with no filter it was shown. I had more luck with the Tourist filter. Anyway, I think it is not to be trusted. I prefer to do my own filtering, thank you.

So, an online tool that gets ALL the OSM info as GEOJSON is OSM to GeoJSON Converter Online - MyGeodata Cloud. You have to get the OSM XML file first but you can do that from Export | OpenStreetMap

I used it with an OSM XML map of the Mt Hotham area and it produced about 10 layer files separately showing roads and rivers and amenities (including huts) and forested areas. Limited to 5MB or 3 times a month before charges. I can live with that for now, if it proves useful. (There are other xml to geojson tools...) Size-wise, it is very similar to the output of my read_osm_to_micropython.py code but probably includes things that I have missed (like huts represented as nodes, for example). It differentiated between things represented by points or by polygons. I have to think about how to use this. I can see that having pre-sorted layers does give me options to speed up map loading, scrolling, zooming etc.

Below is a line from amenity-points.geojson that has the info for Derrick Hut. As you can see there is an awful lot of crap there I care nothing for, like what the heck is "bottle":null supposed to mean? Am I going to keep all that shit or get rid of it? Like, if I just deleted all the nulls, half of it would go away! I can see from a quick Google search that other people feel the same way and there are various posts in GIS forums discussing how to do it.

The only info of real interest are the "name": "Derrick Hut", "amenity": "shelter", and the coordinates.

{ "type": "Feature", "properties": { "osm_id": 251150051, "historic": null, "name": "Derrick Hut", "amenity": "shelter", "tourism": "wilderness_hut", "ele": null, "natural": null, "tower:type": null, "man_made": null, "operator": "Parks Victoria", "ref": null, "highway": null, "public_transport": null, "access": null, "shop": null, "aeroway": null, "waterway": null, "memorial": null, "shelter_type": "weather_shelter", "information": null, "wikidata": null, "alt_name": null, "shelter": null, "bus": null, "departures_board": null, "network": null, "direction": null, "fee": null, "bin": "no", "lit": null, "bench": "yes", "bottle": null, "clothes": null, "cuisine": null, "bar": null, "old_name": null, "outdoor_seating": null, "internet_access": null, "diet:vegetarian": null, "diet:vegan": null, "ford": null, "unisex": null, "toilets:disposal": null, "wheelchair": null, "changing_table": null, "toilets:handwashing": null }, "geometry": { "type": "Point", "coordinates": [ 147.1606277, -36.9711705 ] } },

It is entirely possible that the "properties" are predicated on the "type":"Feature" and maybe there is a less uselessly verbose way to extract the data. (I didn't see an option to drop null properties when converting, but maybe I'll look again.)

As far as I can tell so far the layers are independent and don't cross-reference. However, be careful. I see that Spargo's Hut is in only the amenity-points file but Derrick Hut is in 3 separate files.

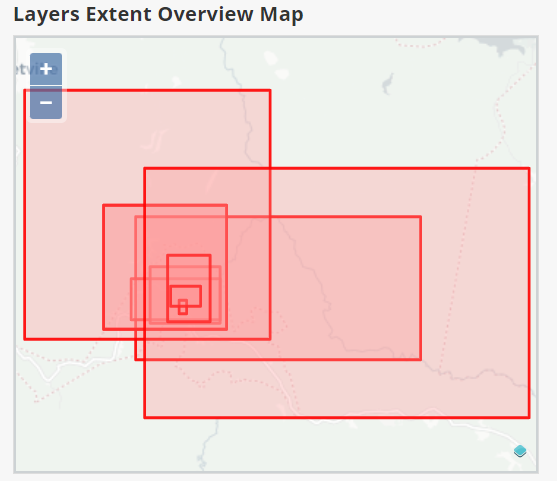

Also, the converter made available a geojson representation of the bounds as a polygon that I pasted into a file called bounds.geojson. Probably it covers the extents of all the layers (which stick out way beyond my original selection) and that's not so good for me. I may have to trim it back considerably. And make it rectangular. Hmm.

It does occur to me also that I will need to de-nest the representation so that each separate point or line or polyline can be read in separately rather than trying to read the entire file into a nested json database. Hmm. The more I look at it the more I feel I may as well stick to xml and do my own json-ifying. Geojson might be less cross-referency and verbose than xml but it is still too chatty for my liking. However the idea of pre-selecting the layers into huts, tracks and water might be useful.

Is Binary A better Way?

I've seen ways representing roads and administrative boundaries that have many thousands of coordinates. As JSON constructs these can take up as many as 100K bytes. In binary they could occupy significantly less space, like 8K bytes per 1000 coordinate pairs. (4 bytes per 7 digit precision latitude or longitude, for e.g. )

The difference this makes in read speed from SD and storage space in memory is very considerable. It makes all the difference when it comes to the question: “Can I hold this entire map in memory for panning and zooming?”

Precision needed for a gps feature for hiking is about 10m. This needs a longitude of +/- 179.9999 so a float of 7 digits is adequate, this means 4 bytes as a binary. (Going to 1m would need 8 digit precision)

Could also save points if we don't record points closer together than 10 m, and convert ways like hut locations to single points rather than collections of points. If it is worth the fiddling.

There is an Ardubson library that might help me. I remember too that my early attempts used BSON when that was all there was (no JSON support at that time for CircuitPython)

I'd have to modify my read_osm_to_circuitpython.py code to convert OSM maps to BSON instead of/as well as JSON, but that wouldn't be too bad. I'll play with that idea first to see if it could produce significantly smaller map files. Format could otherwise be the same, text tags and name, and coordinate array, just that the coordinate array would be binary floats and there'd have to be a size parameter so I knew how many to read. Fairly simple... And if it gets me away from having to reserve as much as 100K for a single way that'd be a good thing. At 8 bytes per coord-pair, 50K would be enough for about 6,000 coordinate pairs (and 100K for about 12,500). That's about enough for an entire map (Hotham has less than 4500 lat/lon pairs) but at the moment I need that much memory for sometimes a single road when stored as JSON! Faster to read, can keep it in memory, all good outcomes. Just can't read it anymore by eye. Awwwww. Could keep the bounds file as JSON because I do tend to mess with that manually. Hmmm.
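To put numbers on the "size parameter plus binary floats" idea, here is a rough sketch. The layout (a 32-bit count followed by lat/lon pairs as 32-bit floats, in the writing machine's byte order) and the names `Coord`, `packCoords` and `unpackCoords` are assumptions made up for this example. It is not BSON and not an existing file format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

static_assert(sizeof(float) == 4, "this sketch assumes 32-bit floats");

struct Coord { float lat; float lon; };  // 4 + 4 = 8 bytes per pair

// Assumed layout: a 32-bit count, then count * (lat, lon) as 32-bit floats.
std::vector<uint8_t> packCoords(const std::vector<Coord>& coords) {
    uint32_t count = static_cast<uint32_t>(coords.size());
    std::vector<uint8_t> out(sizeof(count) + coords.size() * 2 * sizeof(float));
    std::memcpy(out.data(), &count, sizeof(count));
    std::size_t pos = sizeof(count);
    for (const Coord& c : coords) {
        std::memcpy(&out[pos], &c.lat, sizeof(float)); pos += sizeof(float);
        std::memcpy(&out[pos], &c.lon, sizeof(float)); pos += sizeof(float);
    }
    return out;
}

// Read the count first, then exactly that many pairs.
std::vector<Coord> unpackCoords(const std::vector<uint8_t>& in) {
    assert(in.size() >= sizeof(uint32_t));
    uint32_t count = 0;
    std::memcpy(&count, in.data(), sizeof(count));
    assert(in.size() == sizeof(count) + count * 2 * sizeof(float));
    std::vector<Coord> coords(count);
    std::size_t pos = sizeof(count);
    for (Coord& c : coords) {
        std::memcpy(&c.lat, &in[pos], sizeof(float)); pos += sizeof(float);
        std::memcpy(&c.lon, &in[pos], sizeof(float)); pos += sizeof(float);
    }
    return coords;
}
```

With this layout 1000 coordinate pairs pack into 8004 bytes. A PC and the Cortex M4 are both little-endian, so a file written on one should read directly on the other, though that is worth checking rather than assuming.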

I tried to use BSON in the read_osm_to_micropython.py code and ran into the problem that it represents all decimals using a 64 bit format and, even worse, the overheads of the binary protocol actually INCREASED the size of the output file beyond that of the JSON. So I tried a trick, converting the floats to ints before encoding to force the use of 32 bits. What I noticed is that the JSON file size also decreased, naturally, as did the BSON file, but the JSON is STILL smaller than the BSON file. Using a factor of 10000 to convert floats to scaled ints saved me 100KBytes in what was a 380KByte file. This is interesting. Maybe I should keep the JSON and just use scaled ints to force a precision that gives 10m resolution. I.e. int(-36.9761196 *10000) -> -369761 and then /10000 to get latitude back gives -36.9761, where 0.0001 of a degree = 10m-ish. This is adequate for a hiking map. I could round to nearest to reduce the loss of precision to 5m. Note that the gps reading would still be at the highest resolution (whatever that is these days; it used to be 30m but I think it's now +/-3 m). It is only the map representation that suffers, and then it is still comparable to the GPS accuracy so it probably won't be noticed at all.
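The scaling arithmetic as a small sketch, written in C++ here although the conversion itself would live in the Python script. The function names are invented for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

const double SCALE = 10000.0;  // 0.0001 degree steps, roughly 10 m

// Truncating version, the same behaviour as int(deg * 10000) in Python.
int32_t scaleTrunc(double deg) { return static_cast<int32_t>(deg * SCALE); }

// Round-to-nearest version: halves the worst-case error.
int32_t scaleRound(double deg) {
    assert(deg >= -180.0 && deg <= 180.0);
    return static_cast<int32_t>(std::lround(deg * SCALE));
}

// Back to degrees for plotting.
double unscale(int32_t v) { return v / SCALE; }
```

For -36.9761196 both versions give -369761. They differ for a value like -36.97619, where truncation gives -369761 and rounding gives -369762.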

There is also the possibility after this integerisation to run thru the coord list and eliminate duplicates where suddenly two successive coords will be exactly the same because they were within 10m of each other. Or go even further to reduce the number of coords in a way by some other form of decimation.
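Dropping the repeated points is nearly a one-liner with the standard library. A sketch, with `ScaledCoord` and `dropRepeats` as made-up names:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct ScaledCoord {
    int32_t lat;  // degrees * 10000
    int32_t lon;
};

bool samePoint(const ScaledCoord& a, const ScaledCoord& b) {
    return a.lat == b.lat && a.lon == b.lon;
}

// Remove runs of identical successive points, keeping the first of each run.
void dropRepeats(std::vector<ScaledCoord>& way) {
    std::size_t before = way.size();
    way.erase(std::unique(way.begin(), way.end(), samePoint), way.end());
    assert(way.size() <= before);
}
```

Only neighbours in the list are compared, so a way that loops back to an earlier point keeps that later visit, which is what you want for drawing.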

I will try this and see how it looks and if it improves map loading speed and memory usage.

Picture this...

Of course, all this is overlooking the idea of storing a map as an image. I've resisted that because I want the ability to search the maps for features and that is harder/impossible if they are just bitmaps. I could use a combined approach and only store some things like hut locations and the roads and stuff as image, I suppose. Sigh, so many decisions that would come back to byte me...

DEM bones, DEM bones dem Digital Elevation Map bones.....

Databases containing the heights of the land can be got from:

They can be HUGE. 23G? They come as .adf files (which are rasterised tiles), each about 1G in size. At that rate, one or two or three would do for all of Victoria, probably. That'd fit on the SD card OK but reading and drawing might be very slow. Look in d:\map_dem\aac46307-fce8-449d-e044-00144fdd4fa6 for them. hdr.adf seems to be the starting point?

Viewers

How to look at them on a PC?

Grass Windows (osgeo.org) GIS software can be used. It's free. (I tried it. Steep learning curve. Eventually managed to get it to load the database by pointing at hdr.adf and using the r.in.gdal command (via files import raster map).) It took HOURS to load and showed a colored map of terrain. It appeared to save OK in its workspace so I can reload it and it is fast to reload. You can also load individual .adf files (which are only a mere 1GByte or so each) in a similar way.

(file->import rastermap->import of common raster formats [r.in.gdal] and then browse for an .adf file. It then reports 'importing' and appears to do nothing for a LONG time, initially without even so much as a green bar (at least it showed a green bar quickly when I pointed at hdr.adf even though it took hours overall). Let it be... Even for a single .adf file it takes hours.) It appeared to not matter which .adf file I started with, it appeared to read the entire dataset anyway. Hmm.

Has a corrupted file z002010.adf that resulted in the missing chunk of Queensland. That was one GByte's worth so it gives an idea of what I'd need for map tiles. Not so bad, probably a few hundred MBytes at a time for a tile the size I'm interested in, about 10 km square. Probably a bit more than the vector data for a road map though. Has to be, really, it's more dense. Though it is binary so maybe more efficient. Haven't worked out how to extract chunks yet. BTW, Grass is scriptable via Python.

Python (maybe) has modules for working with these sorts of files that I could use to preprocess / extract tiles.

Using DEM on a weeny monochrome screen with not much memory?

I'd want some way to extract tiles and stick on SD card that my little embedded Cortex M4 with limited memory could deal with to display topography. Either as a dither/shaded slope map or as a profile cutting terrain given a direction and starting point (or series of points in a GPX) or as a wiremesh or something easily displayable in monochrome.

I can imagine a profile with the direction of the cut automatically stepping thru 2pi radians might be useful... What would be cuter would be to combine an electronic magnetometer to show the profile as you point the device, giving an idea of 3d shape as you spun it on the spot. Fun times!

Another idea would be a vector field diagram showing magnitude and direction of slope, maybe altitude as well using the length and direction of the vector for the slope but the thickness of the vector in the image to indicate altitude (higher->skinnier to make high points whitest) Yet another might be a simple two color (B/W or dithered) map showing where slope is above some threshold, say 10%. This would highlight valleys and ridges. Might be useful to highlight minima rather than absolutes so that 'best' choices aren't obscured.

Another thought is to show the contour for the current position as white for low slope and gets darker dithering as slope increases. This would have to be recalculated frequently so might not be that good.

Be useful to look at ascii dithering as that would be very fast compared to something like Floyd-Steinberg. Can produce line artifacts though if you use the same character for a particular density. Fortunately there are a lot of chars that have similar density and so you can randomise. Also it depends on font. And if there is a bold font. I could use concentric circles, or hashing or lots of other approaches. The idea of thickened vector arrows is attractive.
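A sketch of the lookup, assuming densities run 0 to 255 with 0 the lightest and the ramp string ordered light to dark. The function names are made up. The noisy variant jitters the density before the lookup, which is a simpler trick than choosing between different characters of similar density but aims at the same line artifacts.

```cpp
#include <cassert>
#include <string>

// Pick a character for a 0..255 density from a ramp ordered light to dark.
char rampChar(int density, const std::string& ramp) {
    assert(!ramp.empty() && density >= 0 && density <= 255);
    return ramp[density * ramp.size() / 256];
}

// Same lookup with a small caller-supplied offset (for example a random
// number between -8 and 8) to break up banding. The sum is clamped to 0..255.
char rampCharNoisy(int density, int noise, const std::string& ramp) {
    int d = density + noise;
    if (d < 0) d = 0;
    if (d > 255) d = 255;
    return rampChar(d, ramp);
}
```

With a 9-character ramp each character covers a band of about 28 density values, so a jitter of a few units only changes the character for pixels that sit near a band boundary.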

.,:;*9&@#

.~:17235469#80%@

A couple of experimental density ramps

Cutting the GRASS

Commands to extract the DEM data for a smaller region from the all-australia one I appear to have, using the GRASS GIS app.

g.region set computational region. Different from r.region which re-adjusts the region of an existing raster map(I think). e.g. for use with r.clip to set bounds of clipped map.

r.clip (Is an addon so you need to add it using GRASS settings->addon extensions and then use it via the command-line) makes a new raster map from an existing one according to region settings

r.contour makes contour lines. Not sure what the units are. Manual implies metres. But using step of 20 very quickly made a vector map with only a few tens of contours over all Australia. Must have been like hundreds of metres in spacing. 0.020 resulted in something more closely spaced. Takes a long time to run...

e.g.

Use preferences projection to set lat/lon to degrees rather than deg:min:sec

g.region (using the gui) and set north and south, east and west lat/lon to -36.84 -37.04 147.08 147.29

r.clip input=w0010@PERMANENT output=hotham

I've tried r.contour and r.relief but they don't work. I think there is some setting wrong or missing.

However, r.slope.aspect elevation=hotham@PERMANENT slope=hothamSlope aspect=hothamAspect pcurvature=hothamCurve tcurvature=hothamTanCurve did produce interesting maps, especially slope and aspect (though aspect seems inside-out to me (degrees counter-clockwise from East) so I might need to change that somehow)

BTW, the data for the maps seem to be in

C:\Users\terry\Documents\grassdata\demolocation\PERMANENT\fcell

and the Hotham map appears to be 1.7MBytes which, if that is all the data, isn't too bad.

Getting out of the GRASS

I will eventually want some way to get elevation maps out of the GRASS and into my Cortex M4 microcontroller. Given that there are tools to load .bmp files in the Arduino world that I currently inhabit, I figured that'd be a start, if I can get from GRASS to BMP. There's also JPEG but I dunno that Arduino libs support that. (It's mathematically much more intense anyway) Also PNG, GIF etc.

Given that DEM data can be simply a scalar field (i.e. a single value for each pixel representing elevation or slope or something) then a grayscale image would be OK. Slope, which is a vector field (direction and angle) might not be so easy, but I might be able to calculate slope from elevation myself onboard without too great a cost. It'd have to be subsampled anyway because representing it on a 1-bit display (i.e. black and white, not even grayscale) would be tricky unless I settled for a simple threshold, such as ignoring direction and just thresholding slope, say black for above 20% (which is hard work to hike on) and white for below.
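The threshold idea as a sketch. It assumes the height differences to the west and north neighbours are already in metres and that the spacing between cells in metres is known. Both are assumptions, since an exported file scaled to 0-255 would need converting back first. The function names are invented.

```cpp
#include <cassert>
#include <cmath>

// Slope as a percentage, from the height change to the west and north
// neighbours (metres) and the spacing between cells (metres).
double slopePercent(double dzWest, double dzNorth, double cellMetres) {
    assert(cellMetres > 0.0);
    return 100.0 * std::sqrt(dzWest * dzWest + dzNorth * dzNorth) / cellMetres;
}

// true = plot black (hard work to hike on), false = leave white.
bool isSteep(double dzWest, double dzNorth, double cellMetres,
             double thresholdPercent = 20.0) {
    return slopePercent(dzWest, dzNorth, cellMetres) > thresholdPercent;
}
```

For example, a rise of 3 m to the west and 4 m to the north on a 25 m grid is a 5 m change over 25 m, which is exactly 20%.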

Here's how you'd do BMP, but it objects to the floating point representation that the Hotham map uses. Looks like I'd need to explicitly scale it.

r.out.gdal --overwrite input=hotham@PERMANENT output=hotham format=BMP type=Byte scale=0.1 THIS DIDN'T WORK. READ ON

maybe if I rescale first using:

r.rescale input=hotham@PERMANENT output=hotham_255@PERMANENT to=0,255

g.region raster=hotham_255@PERMANENT //set the region to be exported

r.out.bin -i input=hotham_255@PERMANENT output=D:\maps_dem\myExports\hotham_255.bin bytes=1 //out as binary

I didn't use .bmp output, opting for binary instead, because the libs to read .bmp in Arduino-land were either 24 bit or 1 bit. No 8 bit. So I wrote my own binary image reader. Pretty damn simple.

Putting the GRASS in the BIN

There is an option in the GRASS export to write to a binary file instead of a bitmap one.

r.out.bin -i input=hotham_255@PERMANENT output=D:\maps_dem\myExports\hotham_255.bin bytes=1

and if you set it to integer output (the -i) and the number of bytes per cell to one and apply it to the hotham_255 map (which has already been set to 8 bits per pixel scaled) then you get a grayscale file without the bitmap format header. Of course you'd have to supply info like lat/lon registration and scaling to meters somehow, but you'd need to do that anyway. It just might be easier to read this binary file into the Cortex processor using C++ file handling and not need a 'special' library to do it. Altitude, slope mag and dir can be in 3 different files. Each file is about 500KByte for the 689x752 resolution map. Arguably I don't need that res and could maybe get away with much (like a factor of 10x10=100) less.
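Reading a headerless one-byte-per-cell file really is just an offset calculation. A sketch using standard C++ streams on a PC as a stand-in for the SD card file, assuming rows are stored top to bottom with no padding. `cellOffset` and `readCell` are made-up names.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>

// Byte offset of cell (row, col) in a headerless file with one byte per cell.
long cellOffset(int row, int col, int width) {
    assert(row >= 0 && col >= 0 && col < width);
    return static_cast<long>(row) * width + col;
}

// Read one cell straight from the file. Returns -1 if the read fails
// (for example when the cell lies beyond the end of the file).
int readCell(std::ifstream& file, int row, int col, int width) {
    file.clear();  // clear any earlier EOF or fail flag
    file.seekg(cellOffset(row, col, width));
    char value = 0;
    if (!file.read(&value, 1)) return -1;
    return static_cast<unsigned char>(value);
}
```

As a size check, 689 x 752 cells at one byte each is 518,128 bytes, which matches the roughly 500KByte files reported above.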

Interestingly, this binary export can be applied to the DEM map even though it hasn't been rescaled to 0-255 and produces a similar sized file. The values are not the same of course and I dunno how it deals with 'out-of-range'. Does it rescale automatically? Truncate? Wrap?

On windows you can read a bin file using powershell and format-hex | more

It's all a matter of altitude

I have managed to read in the binary file to my Feather Express M4 processor and with a simple single pass operation whilst reading from disk without needing to store anything (except pixels in the display buffer which are dedicated memory anyway) I can get a simple contour out of it. Basically the operation:

if(p/16 % 2==0){ //quantise and band on odds and evens

pv=0; //mark this contour line. 0 = black

g_display.drawPixel(x,y,pv);

}

It's not quite what I want but it does show promise. It does not make ridges stand out from valleys though, which is what I want.
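The odd/even banding test can be pulled out into small functions, which also makes a variation easy to try: marking only the pixels where the band changes, to get thin lines instead of filled stripes. This is a sketch of an alternative, not what the device currently does, and the names are invented.

```cpp
#include <cassert>
#include <cstdint>

// Which band a 0..255 height falls in; bands are bandHeight units tall.
int bandOf(uint8_t p, int bandHeight = 16) {
    assert(bandHeight > 0);
    return p / bandHeight;
}

// The test used above: every second band is filled with black.
bool inDarkBand(uint8_t p) { return bandOf(p) % 2 == 0; }

// Alternative: mark a pixel only where its band differs from the pixel to
// the west, which leaves thin contour lines.
bool onBandEdge(uint8_t p, uint8_t westNeighbour) {
    return bandOf(p) != bandOf(westNeighbour);
}
```

Comparing only with the west neighbour misses contours that run west to east, so a fuller version would also compare with the pixel to the north, at the cost of remembering the previous row.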

A different approach was to calc the W/E and N/S slopes by differencing altitude as I read it in.

dx = alt-lastxAlt; //signed slope from the West. lastxAlt is the previous pixel's altitude in the x (west) direction

adx = abs(dx);

dy = alt- lastlineAlt[x]; //lastlineAlt is a memory array that contains the altitudes from the previous line (i.e. to the North)

ady = abs(dy);

ddx=dx-lastdx;

ddy=dy-lastlinedy[x]; //calc second derivatives so I can tell maxima from minima

if(!((adx<=1 && ady<=1) && alt>128)){ //anything that is NOT low slope (W/E and N/S) at more than half altitude

pv=0; //mark this pixel. 0 = black

g_display.drawPixel(x,y,pv); //I bet there's a faster way... but this takes rotations into account

}

lastxAlt=alt; //remember this altitude to use to calc slope from west

lastlineAlt[x]=alt; //also remember it to use to calc slope from north

lastdx=dx; //and remember for second derivative

lastlinedy[x]=dy;

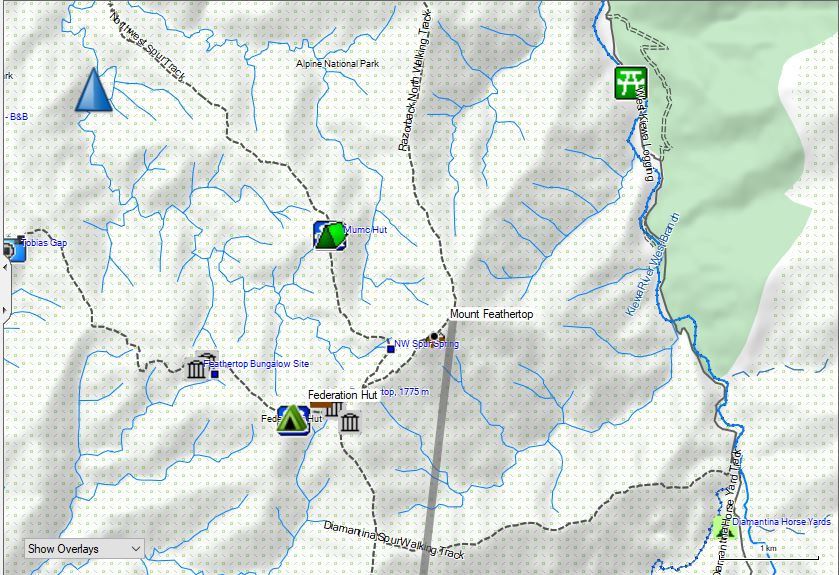

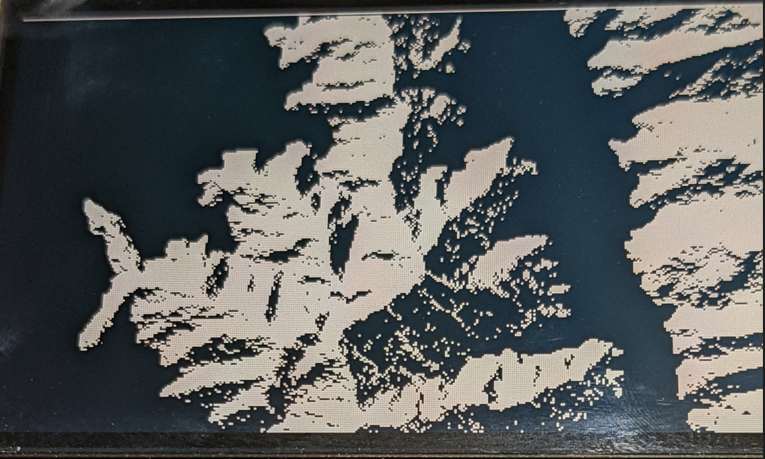

This produced an interesting image that highlights ridges and alpine meadows (and maybe creeks). To relate this image to the original altitude image from GRASS, this is the top left corner, as shown here. If you squint you can see the correspondence. The white is like a skeleton of the yellow higher altitude stuff. You can see from the topo map of the area (Mt Feathertop) that the slope map predicts the various paths along ridges, like the Bungalow, Northwest and Razorback and Diamantina tracks. Note that this is a top-down view unlike some of the pseudo-3D views I discuss later.

A thing to be careful of is that rivers also produce 'trails' using this process and one would prefer ridges. (I think this happened to produce that 'trail' between the Northwest Spur and the North Razorback tracks ). Would need some way to detect slope minima and discriminate rivers. Mind you, detecting rivers would be useful as long as you could tell the difference. Using the second derivative of altitude might do something useful to tell minima from maxima. Noisy though.
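One way the second-derivative idea might look along a single line of altitudes. This is a sketch only: the names are invented, and `minCurve` is an assumed knob for ignoring small wiggles, since the second difference is noisy.

```cpp
#include <cassert>

enum Feature { SLOPE, RIDGE, VALLEY, FLAT };

// Classify the middle of three successive altitudes along one line.
// minCurve is how large the second difference must be before it counts.
Feature classify(int before, int here, int after, int minCurve = 1) {
    assert(minCurve >= 1);
    int d1 = here - before;  // slope arriving
    int d2 = after - here;   // slope leaving
    int curve = d2 - d1;     // second difference: negative at a top
    if (d1 >= 0 && d2 <= 0 && curve <= -minCurve) return RIDGE;
    if (d1 <= 0 && d2 >= 0 && curve >= minCurve) return VALLEY;
    if (d1 == 0 && d2 == 0) return FLAT;
    return SLOPE;
}
```

This only looks along one direction, so a ridge running parallel to the scan line would go unnoticed unless the same test is also run down the columns.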

Note also that something very like an aspect map can be produced simply by plotting white when the slope is mostly positive and black when it isn't. This doesn't have the same problem with discriminating between creeks and ridges, possibly because the human interpreter is subconsciously doing something to eliminate it. At least at this scale. I might be able to see creeks causing false ridges on the Southeast slope of Feathertop though. This is still a top-down view and a plan-view 'ways' file or GPX track could be drawn on it without distortion.

The clause of alt>128 clearly shows low valleys by imposing a threshold but maybe can be dropped.

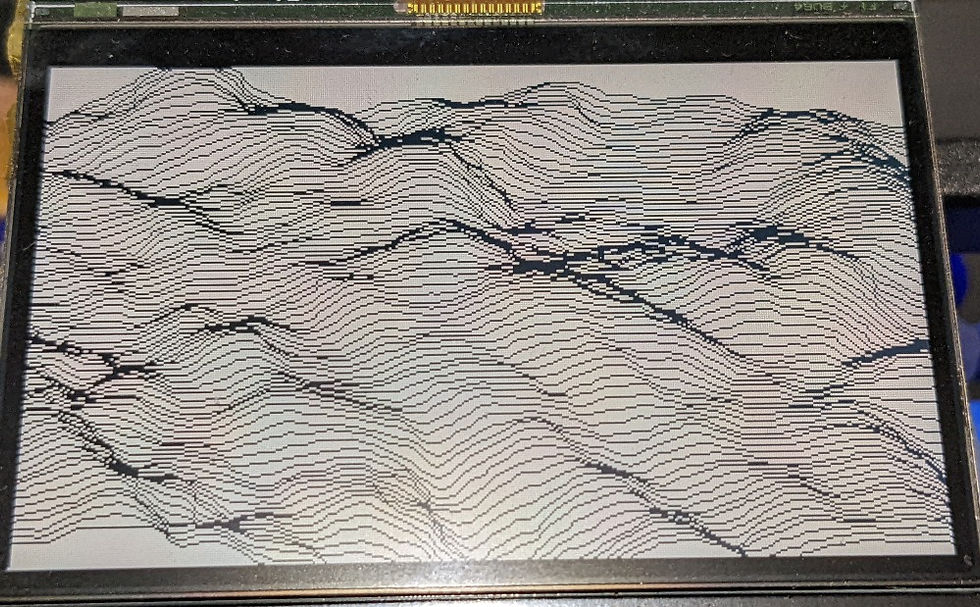

Another interesting (and less cluttered) way to plot terrain is to convert the height values as you traverse the rows of the DEM file into offsets in the display and join the points by lines. As shown using the DEM data for Mt St Gwinear above (note that this is a new area, not the same as the previous DEM maps which were of Mt Hotham and Mt Feathertop), this produces a cross-sectional view. The effect of also adding in a move in the y direction on the display as you go down a row in the DEM file is to make the pseudo-3D view look as if it is from a point raised up above the map. This, if the spacing between slices is right, gives a very nice idea of the terrain, far better (for me) than a contour map. Ridges, at least the ones running in a direction away from the point of view, stand out. Absolute height is difficult to judge though, and ridges running across the viewline might be obscured, as would ridges behind mountains or other high terrain. Possibly being able to rotate the map would help, though it would be fairly slow (tests show not so slow!). Also, if you want to lay a plan-view 'ways' map or gpx track on the terrain, you would have to take the altitude of the ground into consideration when plotting it. Mixing an otherwise top down map with this '3d' one would be somewhat misleading, causing an offset of the tracks from the terrain. This would then make plotting the 'ways' slower, and zooming and panning would be slowed down as well.
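The projection amounts to one line of arithmetic per point. A sketch, where `rowStep`, `heightDivisor` and `topMargin` are made-up tuning parameters rather than values from the real code:

```cpp
#include <cassert>
#include <cstdint>

struct ScreenPoint { int x; int y; };

// Map DEM cell (row, col) with height 0..255 to a display position.
// Each DEM row starts rowStep pixels further down the screen, and height
// lifts the point up the screen (smaller y) by height / heightDivisor pixels.
ScreenPoint project(int row, int col, uint8_t height,
                    int rowStep, int heightDivisor, int topMargin) {
    assert(rowStep > 0 && heightDivisor > 0);
    ScreenPoint p;
    p.x = col;
    p.y = topMargin + row * rowStep - height / heightDivisor;
    return p;
}
```

A track point can be 'stuck' to the ground by running it through the same function with the DEM height of the cell it falls in, which is where the extra cost of plotting ways on this view comes from.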

This terrain plotting is best done at a fairly zoomed out level and is less useful when zoomed in to what I would consider appropriate for 'tracking' oneself. so maybe the two modes need not be used together. Again I long for some color to make the tracks stand out from the elevation plots!

Nonetheless, this style of representing terrain appeals to me, even if I just use discrete points to show a path 'stuck' to the ground level. I worked out that I 'could' hold the DEM data in a memory array but it used about 100Kbytes and that's feasible if I don't do the JSON deserialisation of OSM map 'ways'. (The deserialisation buffer needs to be 70KBytes or so to deal with very long 'ways' with lots of points in them). It is really pushing the limits to hold the DEM data in memory if I still want maps with reasonably big paths like highways on it. So I did an experiment to see how fast (or slow) getting altitude out of the DEM file on the SD card each time (rather than hold the file in memory) would be for random points on a path and was surprised. 1000 points at random on a W->E path took much less than 1 second, including plotting the background terrain and all the 1000 points on the display. Like this, where I am looking South->North over Mt St Phillack near Mt St Gwinear:

Now, I realise that for this to be truly useful, given that I don't want to be walking in a W-E direction all the time, the map needs to be rotatable to give a view orthogonal to the direction of travel, with that direction plotted along the X axis of the display and the ground height in the Y axis. I'll tackle rotated maps next. For arbitrary directions it might be slowish, involving sines and cosines and transforms, but I could restrict it to 45 degree steps and limit the trig, or maybe use a lookup table in 15 degree steps.
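A rough sketch of what the 15 degree lookup table option could look like, using integer maths only. This is an illustration under my own assumptions (a quarter-wave sine table scaled by 1024, and the hypothetical names kSinQ10, sinStepQ10 and rotateStep), not code from the device.

```cpp
#include <cassert>
#include <cstdint>

// sin() for 0..90 degrees in 15-degree steps, scaled by 1024.
static const int16_t kSinQ10[7] = {0, 265, 512, 724, 887, 989, 1024};

// Sine of (step * 15) degrees, scaled by 1024. Any integer step is accepted.
int sinStepQ10(int step) {
    step = ((step % 24) + 24) % 24;              // wrap to 0..23, one full turn
    if (step <= 6)  return  kSinQ10[step];       // 0..90 degrees
    if (step <= 12) return  kSinQ10[12 - step];  // 90..180 degrees
    if (step <= 18) return -kSinQ10[step - 12];  // 180..270 degrees
    return -kSinQ10[24 - step];                  // 270..360 degrees
}

// Cosine is the sine shifted by 90 degrees (6 steps).
int cosStepQ10(int step) { return sinStepQ10(step + 6); }

struct Point {
    int x;
    int y;
};

// Rotate p about centre c by (step * 15) degrees. The rotation is
// counter-clockwise in a y-up frame, so it appears clockwise on a
// display whose y axis points down. Results truncate towards zero.
Point rotateStep(Point p, Point c, int step) {
    const int s = sinStepQ10(step);
    const int k = cosStepQ10(step);
    const int dx = p.x - c.x;
    const int dy = p.y - c.y;
    Point r;
    r.x = c.x + (dx * k - dy * s) / 1024;
    r.y = c.y + (dx * s + dy * k) / 1024;
    return r;
}
```

For example, rotateStep(Point{10, 0}, Point{0, 0}, 6) turns the point by 90 degrees and gives (0, 10). The table costs 14 bytes and avoids floating-point trig calls entirely.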

It might not be up to animations and all, but maybe a static view of a meandering path from a GPX with the viewpoint able to be shifted and rotated with minor delays of a second or two. Or in the case of no pre-determined path, being able to see what ups and downs a direction of travel would result in would help in pathfinding. Ultimately, an algorithm for finding a 'best' path given endpoints and terrain in between would be fun!

I did a simple test in which I read the DEM file in a random access fashion one byte at a time, using seekSet() followed by readBytes(&h,1), to simulate getting height data along slices not aligned W->E (the W->E alignment matches the file organisation, which lets the file be read a row at a time). The speed at which this random-access byte-at-a-time method rendered the height slices was not perceptibly different (to the user) from the case where the DEM file is read a row at a time. This gives me hope that rendering an (almost) arbitrary view angle (which needs random access), or even animations of rotating the map, will be fast enough to be useful. Movies to come!
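The byte-at-a-time access pattern from that test can be sketched as below. To keep it runnable on a desktop it uses a standard C++ stream as a stand-in for the SD card file, so seekg() and read() take the place of seekSet() and readBytes(). The function name readHeight and the row-major, one-byte-per-cell layout are assumptions for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <sstream>  // std::istringstream, handy for trying this without an SD card
#include <string>

// Read the height byte at (col, row) from a row-major DEM stream with
// `width` bytes per row. Returns false if the seek or the read fails.
bool readHeight(std::istream& dem, int width, int col, int row, uint8_t& h) {
    assert(width > 0 && col >= 0 && col < width && row >= 0);
    const std::streamoff offset =
        static_cast<std::streamoff>(row) * width + col;
    dem.clear();                       // drop any earlier EOF or fail state
    dem.seekg(offset, std::ios::beg);  // stands in for seekSet()
    char byte = 0;
    dem.read(&byte, 1);                // stands in for readBytes(&h, 1)
    if (!dem) return false;
    h = static_cast<uint8_t>(byte);
    return true;
}
```

With a 4-wide test "file" such as std::istringstream dem("ABCDEFGH"), asking for column 2 of row 1 seeks to offset 6 and returns the byte 'G'.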

Urk, I've suffered a cognitive disruption. I plotted the same terrain as the St Gwinear map above (which climbs from South to North, and the view above is looking from S->N), but this time looking from North towards South instead and to be honest, I can't tell if the terrain is descending (which it should be). To me it also looks like it's ascending and that can't be right. It's like the issue I have with contour lines, not knowing from looking just at the lines (and not the numbers, which I can usually barely trace or read anyway, Grr) if they go up to a ridge or down to a valley. No Pickies yet because I might come up with a fix for it (or I might have made an egregious mistake). I might need slope arrows. Yuk.

Here's a short video showing how quickly the terrain renders as it goes through a scan of a parameter that shows the map at a different virtual observer altitude, changing the apparent tilt. Map is the Mt St Gwinear DEM at 30m res from carpark including Mt St Phillack and over to Baw Baw ski area. I'm looking from the North and Mt St Phillack is more or less in the centre. You can see that it is pretty fast, taking about 0.5 sec to rerender and display. I imagine using 8 keys to let the user step back and forth in tilt and rotate and zoom would be enough of a UI. Panning too, I guess. Maybe 10 keys. It's OK, I got 16 to play with.

There's a few things I need to work out here. One is that the abrupt end of the DEM data at the far side of the terrain plot makes it look like a sharp cliff and that isn't necessarily the case. I don't want to re-invent Google Earth here, just want enough options that the user can adjust things to get a good idea of what's ahead, or along a path. That's the other thing, I need to be able to show a path, pinned to the terrain, with a small number of Points of Interest like huts, and a plot of the height profile along it. There's enough room on the display to show both the 3d terrain and the plot, if I am not too demanding. This is probably where I'll focus, rather than on hugely detailed OSM maps with lots of street names and expensive (i.e. lots of coords) boundaries and roads and things (which let's face it, my off-the-shelf GPS devices do better in many ways.) This way I'll be able to make sure that the info I really want (i.e. my path(s), huts and rivers and peaks, turnoffs) are available.
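For the height profile along a path, one simple approach is to sample the DEM at evenly spaced points along each leg of the path. The sketch below does that for a single straight leg, assuming the DEM is already in memory as a row-major byte array. The name heightProfile and the nearest-cell sampling (no interpolation) are choices made for this example only.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Sample n heights at even spacing along the straight line from cell
// (x0, y0) to cell (x1, y1) of a row-major DEM held in memory.
// Each sample takes the nearest cell; points off the grid are clamped.
std::vector<uint8_t> heightProfile(const std::vector<uint8_t>& dem,
                                   int width, int height,
                                   int x0, int y0, int x1, int y1, int n) {
    assert(width > 0 && height > 0 && n >= 2);
    assert(dem.size() ==
           static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    std::vector<uint8_t> profile;
    profile.reserve(static_cast<std::size_t>(n));
    for (int i = 0; i < n; ++i) {
        const double t = static_cast<double>(i) / (n - 1);  // 0.0 .. 1.0
        long x = std::lround(x0 + (x1 - x0) * t);
        long y = std::lround(y0 + (y1 - y0) * t);
        x = std::min<long>(std::max<long>(x, 0), width - 1);
        y = std::min<long>(std::max<long>(y, 0), height - 1);
        const std::size_t index =
            static_cast<std::size_t>(y) * static_cast<std::size_t>(width) +
            static_cast<std::size_t>(x);
        profile.push_back(dem[index]);
    }
    return profile;
}
```

The returned heights can be plotted left to right as a strip chart next to the 3d view. A GPX track would call this once per leg and append the results.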

Just an ironic blast from the past here. The very first thing I did in 1984-ish when I made my very first computer capable of drawing graphics, based on a Z80 CP/M OS and using a TTL based monochrome graphics device of my own design drawing to a tiny green CRT which was rescued from a business computer the size of an executive desk, was to plot 3d terrain models. And here I am 40 years later doing it still. Admittedly on a much more portable platform.... and with much higher res and faster renders.

To do rotations, I implemented a random access method to read a single byte (height) from anywhere (given an X and Y coordinate calculated from Longitude and Latitude) in the DEM file and found that if I was reading up or down in X/longitude (i.e. the file position pointer only changed by +/-1) it took 10us, but if I was reading up or down in Y/Latitude (i.e. the file position changed each time by +/- the number of bytes in the width of the DEM map) it took 3000us. Big difference. Really fast to draw the terrain when looking South to North or vice versa, but really slow when looking East to West or West to East, or in any other direction. Because the terrain has to be drawn with the profiles going across the screen regardless of the angle of rotation, it means that I have to get the heights not necessarily in a nice sequence. The usual way to deal with this would be to read the file into a memory buffer and access the heights from that.
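The step from Longitude and Latitude to a file position can be sketched like this. The DemGrid fields and the georeferencing (tile anchored at its north-west corner, square cells measured in degrees) are assumptions for illustration, since the real DEM header details aren't covered here.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical description of a DEM tile; field names are illustrative.
struct DemGrid {
    double westLon;   // longitude of the left edge of column 0, degrees
    double northLat;  // latitude of the top edge of row 0, degrees
    double cellDeg;   // cell size in degrees, same in both axes here
    int width;        // cells (bytes) per row
    int height;       // number of rows
};

// Convert lon/lat to a byte offset in the row-major file.
// Returns -1 if the point lies outside the tile.
long demOffset(const DemGrid& g, double lon, double lat) {
    assert(g.cellDeg > 0.0 && g.width > 0 && g.height > 0);
    const int col = static_cast<int>(std::floor((lon - g.westLon) / g.cellDeg));
    const int row = static_cast<int>(std::floor((g.northLat - lat) / g.cellDeg));
    if (col < 0 || col >= g.width || row < 0 || row >= g.height) return -1;
    return static_cast<long>(row) * g.width + col;
}
```

Moving one cell east changes the offset by 1, while moving one cell south changes it by the full row width. That stride is why the Y/Latitude reads jump around the file so much more than the X/longitude ones.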

I have previously tried to do this and the device has enough memory to make it possible but I run into issues with memory management because there is another bit of code that demands a huge buffer and that is the JSON decoder for the OSM map 'ways' (where a single particularly lengthy 'way' consisting of many many coordinate pairs (like a river or admin boundary) might easily chew up 70k bytes). Making a static allocation of memory for that AND for the DEM map array is just a bit too much. I played with dynamic allocation using malloc and it compiles but crashes. I am wondering, since I don't do JSON decoding and DEM file drawing at the same time, if I can just statically allocate a single large chunk of memory as a byte array and use it for both purposes at different times? And in fact for other possibly memory demanding tasks as well. I will investigate.
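One way the "single large chunk used for both purposes at different times" idea might look is a static buffer with an owner tag, so that two tasks can't hold it at once by accident. This is only a sketch: the class name SharedScratch, the 100 KB size and the owner names are hypothetical, and how the JSON decoder would be pointed at the buffer is left open.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Who currently holds the scratch area. Names are illustrative only.
enum class ScratchOwner { None, JsonWays, DemHeights };

// One statically sized scratch area lent to one task at a time.
class SharedScratch {
public:
    static constexpr std::size_t kSize = 100 * 1024;  // hypothetical size

    // Hand out the buffer if it is free and big enough, else nullptr.
    uint8_t* acquire(ScratchOwner who, std::size_t bytes) {
        assert(who != ScratchOwner::None);
        if (owner_ != ScratchOwner::None || bytes > kSize) return nullptr;
        owner_ = who;
        return buffer_;
    }

    // Give the buffer back; only the current owner may release it.
    bool release(ScratchOwner who) {
        if (who == ScratchOwner::None || owner_ != who) return false;
        owner_ = ScratchOwner::None;
        return true;
    }

    ScratchOwner owner() const { return owner_; }

private:
    uint8_t buffer_[kSize];
    ScratchOwner owner_ = ScratchOwner::None;
};
```

Declared once as a global (static storage, so it never touches the heap), the DEM code would acquire it before loading heights and release it before any 'ways' decoding starts. A failed acquire is an explicit nullptr rather than a crash.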

Debugging the debugger again...

I was just thinking to myself that the whole J-Link, VSCODE with Cortex-debug arrangement I had for programming and debugging my code on the Feather was working really well, and then it stopped working....

For some time the Cortex-debug extension had been threatening that I needed to upgrade the version of GDB from 8 to 9 or it would stop working. I looked around and couldn't see how I could do that, as I was relying on the Adafruit package for the GDB and I wasn't even sure if the J-Link would work with GDB 9, so I left it alone whilst it continued to work, figuring I'd fix it when I had to. Well, it looks like that day came, and after a bit of poking around I found others had the same problem, caused by a recent auto update of the Cortex-debug extension. I worked out how to roll Cortex-debug back to 1.4.4 from 1.6.0 to get it to work again. Here's a link to discussion:

To actually do it, run the VSCODE program, click on the extensions manager (like a little picture of boxes with one disconnected, on the lefthand panel), find Cortex-debug, click on the 'V' down arrow next to uninstall, choose install other version, and select 1.4.4.

Deja-view all over again

I had previously discovered that malloc() never says no, i.e. if you ask for too much memory it DOES NOT RETURN A NULL. In order to load the entire DEM file into a memory variable for fast access I had to work around this problem with malloc() by checking the amount of memory available before using malloc(). Once I did that, I could then adjust the size of the global DynamicJsonDocument g_waysDoc(50000) (which reserves a lot of memory for something else) down until there was enough mem to read the file into memory and then use malloc() for a buffer for the DEM terrain height data. Now that I am getting heights from a memory variable instead of reading the file every time, the 'rotating terrain' view code works very fast (7 frames per second!) even for viewing angles that are not aligned N->S or S->N (which had worked fast anyway even using file reading). Here's a movie of it in real-time (not sped up) stepping by 5 degree increments in a full 360. I stuck in a big 'N' that rotates with the terrain to show what direction North is. In practice I'll do something else to show what direction the view is in. I'm pretty happy with the performance and how it looks and am impressed at what it shows me about the terrain. I can see some valleys and ridges that are far less obvious from a 2d map, even with contours and shading. (It looks a lot less pixelated on the real Sharp screen, possibly because the video was taken at 2x zoom.) The point of rotation is more-or-less Mt St Phillack, near Mt St Gwinear in Gippsland, Victoria, Australia.
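The "check before you malloc()" workaround can be wrapped up roughly as follows. This is a sketch, not my actual code: guardedAlloc and FreeMemFn are invented names, and the free-memory query is passed in as a function because how to measure it is board specific (on the real device it would have to come from something like the gap between the top of the heap and the stack pointer, which is an assumption I haven't shown here).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Caller-supplied estimate of free RAM in bytes. Hypothetical hook.
using FreeMemFn = std::size_t (*)();

// Allocate `want` bytes only if the free-memory estimate leaves at least
// `reserve` bytes spare afterwards. Returns nullptr instead of trusting
// a malloc() that never refuses.
void* guardedAlloc(std::size_t want, std::size_t reserve, FreeMemFn freeMem) {
    assert(freeMem != nullptr);
    const std::size_t freeBytes = freeMem();
    if (want == 0) return nullptr;
    if (freeBytes < reserve) return nullptr;
    if (freeBytes - reserve < want) return nullptr;
    return std::malloc(want);
}
```

The reserve keeps some headroom for the stack. If the call comes back nullptr, that is the cue to shrink something else (the JSON document, say) or fall back to reading the file directly.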

BTW, whilst it is doing that rotating view thing, I am seeing a current drain of about 27mA from the single-cell LiPo. A good part of this will be the GPS module (rated to pull 20mA all by itself) and a small flashing LED that I haven't disabled yet. If I can disable the GPS when I don't need it and get rid of that LED, I can reduce the power needs for when it is just displaying to less than 5mA. That sounds pretty good! (Actually it sounds too good. I am suspicious...)

One thing I need to work out (amongst many) is how to label points on the terrain. I imagine that single letters or numbers (not unlike the 'N' but smaller) would be OK-ish, stuck to the terrain at the height of the Point Of Interest they mark. Some system of seeing what the label name is will be required. Maybe I can repurpose that e-ink display for that? Hmm.

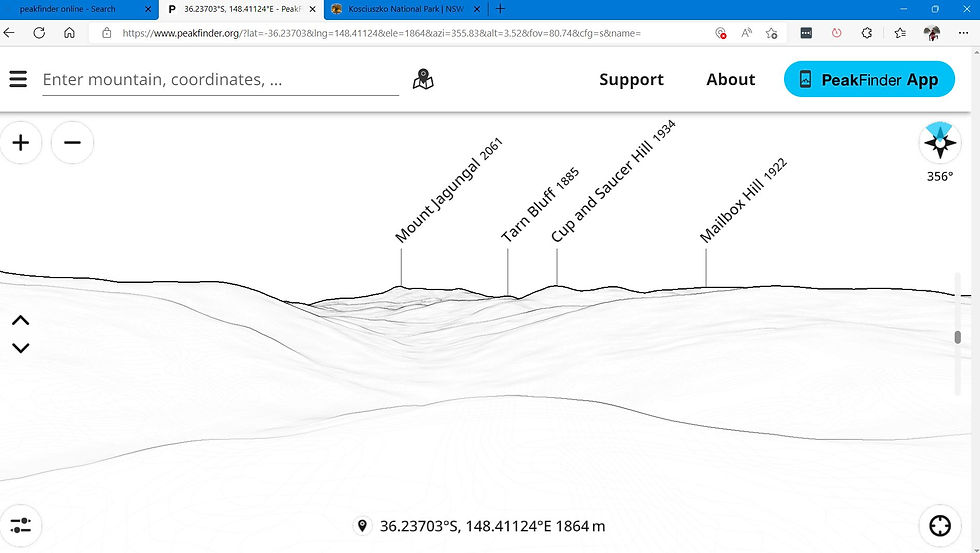

There's an App for That

I've found that there is an app for Android (and maybe Apple) and also an online demo of a product called PeakFinder that does something similar (but more sophisticated) to my terrain viewing efforts above. See www.peakfinder.org.

During a recent ski-trek we found ourselves in a valley looking down towards where we knew the hut was and trying to correlate what we could see against the paper map we had. The map's contour lines gave some clues but it was quite some guesswork to align the bumps and ridges to the map. It would have been useful to have this app then. I recreated the situation when I got home to see if it would be useful, using a photo I took at that spot.

Compare the two images above, one the photo and one the skyline shown by PeakFinder. If I squint and use my imagination a bit I can see the correlation, and now I know which bump is 'Cup and Saucer Hill', which is great because the hut is directly across the valley from that. Not far to go now! Now, we really didn't need this navigational info at the time, to be honest, but I can imagine times it would be useful... like that time we were aiming for a col in the whiteout and we couldn't actually see it. Could PeakFinder show us where it should be? Only if you can trust the electronic compass on your phone, and lots of people have complained about them in this respect (and I've had issues myself with them). So, nice idea, but it needs to be more reliable than your average smartphone. On a more recent trip I tested it out on the phone in that area and found that the electronic compass caused problems. It would be out by 20 degrees at times, making it hard to tell which peak was which.